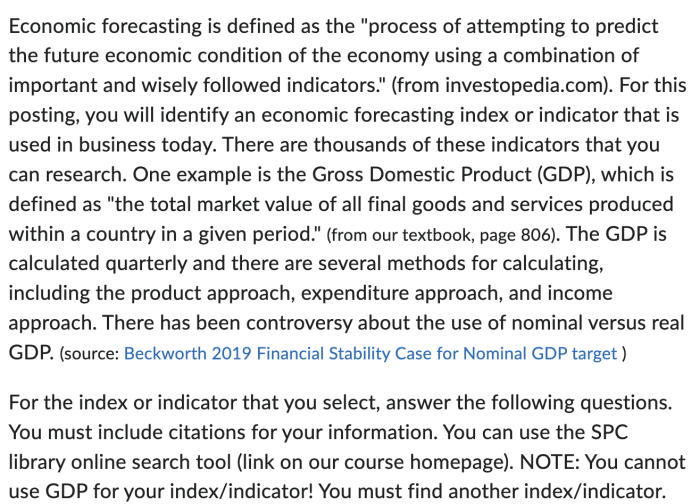

Economic forecasting, a crucial element in navigating the complexities of the global economy, involves predicting future economic trends. This review explores a range of established and emerging methods, from traditional econometric models and time series analysis to the increasingly influential applications of big data and machine learning. We delve into both quantitative and qualitative approaches, examining their strengths, weaknesses, and practical applications, ultimately aiming to provide a comprehensive understanding of this vital field.

The accuracy of economic forecasts significantly impacts policy decisions, investment strategies, and overall economic stability. Understanding the underlying methodologies and their inherent limitations is therefore paramount for informed decision-making. This review will explore the historical development of forecasting techniques, compare and contrast various approaches, and analyze both successful and unsuccessful case studies to illustrate the practical application and challenges involved in economic forecasting.

Introduction to Economic Forecasting Methods

Accurate economic forecasting is crucial for informed decision-making across various sectors, from governments and central banks managing monetary and fiscal policies to businesses planning investments and production. Predicting economic trends allows stakeholders to anticipate challenges, capitalize on opportunities, and mitigate potential risks, ultimately contributing to economic stability and growth. Without reliable forecasts, resource allocation becomes inefficient, leading to missed opportunities and potentially negative economic consequences.

Economic forecasting methods have evolved significantly over time. Early methods relied heavily on qualitative judgments and simple extrapolations of past data. The development of econometrics in the 20th century revolutionized the field, introducing sophisticated statistical techniques to model economic relationships. The rise of computing power further enhanced forecasting capabilities, enabling the use of complex models and large datasets. More recently, the integration of machine learning and artificial intelligence has opened up new avenues for improving forecast accuracy and efficiency. This evolution reflects a continuous effort to improve the understanding of economic dynamics and to develop more accurate predictive tools.

Limitations of Economic Forecasting Models

Despite these advances, all economic forecasting models have limitations. These stem from the complexity of economic systems, the availability and quality of data, and the unpredictability of human behavior. Economic models are simplifications of reality that omit many interactions and feedback loops. Incomplete or inaccurate data can significantly reduce the reliability of forecasts. Unforeseen events, such as natural disasters or geopolitical crises, can drastically alter economic trajectories and render even the most sophisticated models inaccurate. The COVID-19 pandemic, for instance, upended global economic forecasts, and many forecasting models failed to predict the surge in inflation in 2021-2022. Human behavior adds a further source of uncertainty: consumer and business confidence can be highly volatile and difficult to predict precisely.

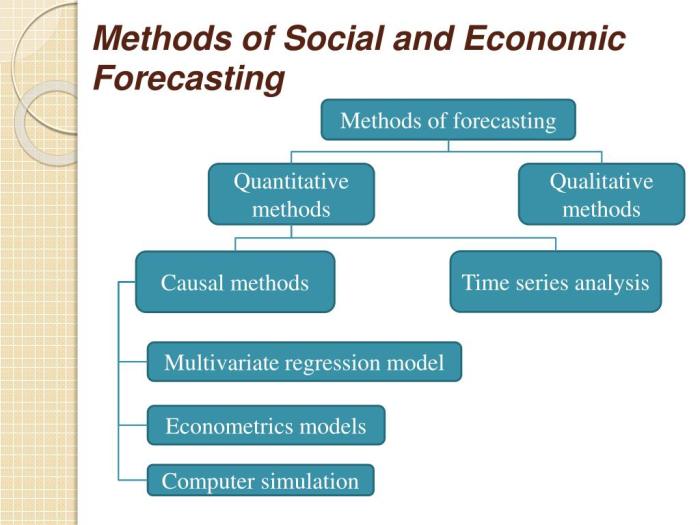

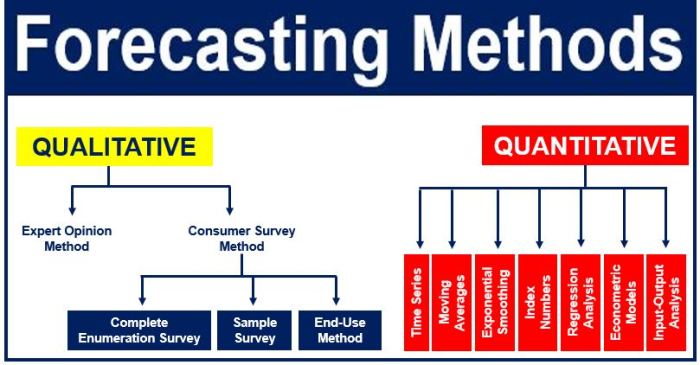

Quantitative Forecasting Methods

Quantitative forecasting methods rely on mathematical and statistical models to predict future economic outcomes. These methods offer a structured approach, allowing for the incorporation of numerous variables and the quantification of uncertainty. This contrasts with qualitative methods, which rely more heavily on expert judgment and less on numerical data. We will explore two prominent quantitative approaches: econometric modeling and time series analysis, along with the use of leading indicators.

Econometric Modeling and Time Series Analysis: A Comparison

Econometric modeling and time series analysis are both quantitative forecasting techniques, but they differ in approach and application. Econometric models specify relationships between economic variables, typically estimated by regression; the estimated coefficients describe associations, and reading them as causal links requires additional identifying assumptions. Time series analysis, by contrast, forecasts a variable primarily from its own historical pattern, as in univariate models such as ARIMA, although multivariate extensions such as vector autoregressions exist. Econometric models are better suited to understanding the economic mechanisms driving change, while time series analysis is more useful when a variable exhibits strong autocorrelation or when few explanatory variables are available. The two also differ in the data they use: econometric models may combine cross-sectional and time series data, whereas time series analysis relies exclusively on time series data.

Econometric Model for Predicting GDP Growth

A hypothetical econometric model to predict GDP growth (GDPG) might include variables like consumer spending (C), investment (I), government spending (G), and net exports (NX). We can posit a simple linear model:

$$GDPG_t = \beta_0 + \beta_1 C_t + \beta_2 I_t + \beta_3 G_t + \beta_4 NX_t + \varepsilon_t$$

where:

* $GDPG_t$ represents GDP growth in period $t$.

* $C_t$, $I_t$, $G_t$, and $NX_t$ represent consumer spending, investment, government spending, and net exports in period $t$, respectively.

* $\beta_0$ is the intercept.

* $\beta_1$, $\beta_2$, $\beta_3$, and $\beta_4$ are the coefficients representing the impact of each variable on GDP growth.

* $\varepsilon_t$ is the error term.

The expected relationships are as follows:

| Variable | Expected Relationship with GDPG | Rationale |
|---|---|---|
| Consumer Spending (C) | Positive ($\beta_1 > 0$) | Increased consumer spending drives economic growth. |
| Investment (I) | Positive ($\beta_2 > 0$) | Investment in capital goods boosts productivity and growth. |
| Government Spending (G) | Positive ($\beta_3 > 0$) | Government spending can stimulate demand and growth. |
| Net Exports (NX) | Positive ($\beta_4 > 0$) | Positive net exports contribute to aggregate demand. |

This model is deliberately simplified. Because GDP equals C + I + G + NX by national accounting identity, an applied model would typically enter these components as growth rates or lagged values rather than as contemporaneous levels. A real-world model would also include more variables, allow for time lags in economic effects, and might employ more sophisticated techniques to handle non-linear relationships or heteroscedasticity. For example, interest rates (as a measure of monetary policy) or oil prices could be added as regressors.
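As a rough illustration of how the coefficients in such a model are estimated, the sketch below fits the linear specification by ordinary least squares. Everything in it is an assumption made for the example: the data are synthetic random draws standing in for the four regressors, the "true" coefficients in `true_beta` are invented, and NumPy's `lstsq` is used as one convenient solver among several.

```python
import numpy as np

# Synthetic data: every number here is made up for illustration only.
rng = np.random.default_rng(0)
n = 200

# Columns stand in for C, I, G and NX (hypothetical, standardized values).
X = rng.normal(loc=0.0, scale=1.0, size=(n, 4))
true_beta = np.array([0.5, 0.6, 0.2, 0.1, 0.3])  # beta_0 .. beta_4 (assumed)
noise = rng.normal(scale=0.1, size=n)             # epsilon_t

# Design matrix with a leading column of ones for the intercept beta_0.
design = np.column_stack([np.ones(n), X])
gdpg = design @ true_beta + noise

# Ordinary least squares: choose beta to minimize the sum of squared residuals.
beta_hat, *_ = np.linalg.lstsq(design, gdpg, rcond=None)

labels = ["beta_0", "beta_1 (C)", "beta_2 (I)", "beta_3 (G)", "beta_4 (NX)"]
for label, estimate in zip(labels, beta_hat):
    print(f"{label}: {estimate:.3f}")
```

With enough observations and little noise, the estimates land close to the assumed coefficients. Real macroeconomic data are far less cooperative, which is why the refinements mentioned above matter.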

Applying ARIMA Modeling for Inflation Forecasting

ARIMA (Autoregressive Integrated Moving Average) modeling is a powerful time series technique for forecasting inflation. The application involves a step-by-step process:

1. Data Preparation: Obtain a historical time series of inflation data (e.g., Consumer Price Index (CPI) inflation rate). The data should be stationary, meaning its statistical properties (mean, variance) do not change over time. Differencing may be necessary to achieve stationarity.

2. Model Identification: Set the order of integration d to the number of times the series had to be differenced to become stationary in step 1. Then analyze the autocorrelation function (ACF) and partial autocorrelation function (PACF) of the stationary data to choose the autoregressive order p and the moving average order q of the ARIMA(p,d,q) model: significant lags in the PACF suggest a value for p, and significant lags in the ACF suggest a value for q.

3. Model Estimation: Estimate the parameters of the chosen ARIMA(p,d,q) model using statistical software. This involves fitting the model to the historical data and obtaining estimates for the AR and MA coefficients.

4. Model Diagnostics: Assess the model’s goodness-of-fit using diagnostic tools such as residual analysis. This checks if the residuals are randomly distributed and free from autocorrelation, indicating a well-specified model.

5. Forecasting: Use the estimated ARIMA model to generate forecasts for future periods. Prediction intervals can also be generated to quantify the uncertainty associated with the forecasts. For instance, a model might predict 2% inflation for the next quarter, with a 95% prediction interval of 1.5% to 2.5%. The accuracy of the forecasts depends heavily on the quality of the data and the appropriateness of the chosen model. In practice, the model is usually refined iteratively as new data arrive and forecast performance is evaluated.
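The steps above can be sketched in a few lines for the simplest non-trivial case, an ARIMA(1,1,0) model. This is a minimal illustration and not a substitute for a statistics package: the series is synthetic, the order (1,1,0) is assumed instead of being identified from ACF/PACF plots, and the AR coefficient is estimated by least squares where dedicated software would normally use maximum likelihood. All function names are hypothetical.

```python
import random

def difference(series):
    """First difference of a series (the d = 1 step of ARIMA)."""
    return [later - earlier for earlier, later in zip(series, series[1:])]

def fit_ar1(x):
    """Least-squares estimate of phi in x_t = phi * x_{t-1} + e_t (no intercept)."""
    numerator = sum(prev * cur for prev, cur in zip(x, x[1:]))
    denominator = sum(prev * prev for prev in x[:-1])
    return numerator / denominator

def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation, used here as a crude residual diagnostic."""
    mean = sum(x) / len(x)
    numerator = sum((a - mean) * (b - mean) for a, b in zip(x, x[1:]))
    denominator = sum((a - mean) ** 2 for a in x)
    return numerator / denominator

def forecast_arima_110(series, phi, steps):
    """Forecast levels by forecasting differences and cumulating them."""
    level = series[-1]
    diff = series[-1] - series[-2]
    forecasts = []
    for _ in range(steps):
        diff = phi * diff      # AR(1) forecast of the next difference
        level = level + diff   # undo the differencing
        forecasts.append(level)
    return forecasts

# Synthetic "inflation" series whose changes follow an AR(1) process (assumed).
random.seed(42)
TRUE_PHI = 0.6
changes = [0.0]
for _ in range(2000):
    changes.append(TRUE_PHI * changes[-1] + random.gauss(0.0, 0.02))
inflation = [2.0]
for change in changes[1:]:
    inflation.append(inflation[-1] + change)

diffs = difference(inflation)   # step 1: difference to obtain a stationary series
phi_hat = fit_ar1(diffs)        # step 3: estimate the AR coefficient
residuals = [cur - phi_hat * prev for prev, cur in zip(diffs, diffs[1:])]
print(f"estimated phi: {phi_hat:.3f}")
print(f"lag-1 residual autocorrelation: {lag1_autocorrelation(residuals):.3f}")  # step 4
print("next four periods:", [round(f, 3) for f in forecast_arima_110(inflation, phi_hat, 4)])
```

A residual autocorrelation near zero is consistent with a well-specified model. A fuller diagnostic would examine several lags, for example with a Ljung-Box test.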

Advantages and Disadvantages of Leading Indicators in Forecasting

Leading indicators are economic variables that tend to change before changes in the overall economy. Their use in forecasting offers valuable insights but also presents certain challenges.

- Advantages:

  - Early warning signals: Leading indicators provide early warnings of potential economic turning points, allowing businesses and policymakers to prepare.

  - Improved forecasting accuracy: When used in conjunction with other forecasting methods, leading indicators can enhance predictive accuracy.

  - Proactive decision-making: Early insights facilitate proactive decision-making, helping to limit the impact of economic downturns.

- Disadvantages:

  - False signals: Leading indicators can sometimes generate false signals, leading to inaccurate forecasts.

  - Short or unstable lead times: The lead time can be short or vary from one cycle to the next, which makes the indicators less useful for long-term predictions.

  - Data revisions: Leading indicator data is often subject to revisions, affecting the reliability of forecasts based on these indicators.

  - Interpretation challenges: Interpreting the signals from multiple leading indicators can be complex and require significant expertise.
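One simple way to check how far ahead an indicator actually leads is to correlate it with the target series at several lags and see where the relationship is strongest. The sketch below does this on synthetic data in which the lead is three periods by construction. The function names, the data, and the choice of Pearson correlation are all assumptions made for illustration.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def best_lead(indicator, target, max_lead):
    """Return (lead, correlation) with the strongest corr(indicator[t], target[t + lead])."""
    scores = {}
    for lead in range(max_lead + 1):
        x = indicator[:len(indicator) - lead]  # indicator observed `lead` periods earlier
        y = target[lead:]                      # target observed `lead` periods later
        scores[lead] = pearson(x, y)
    lead = max(scores, key=lambda k: abs(scores[k]))
    return lead, scores[lead]

# Synthetic example: the target follows the indicator with a 3-period delay.
random.seed(1)
TRUE_LEAD = 3
indicator = [random.gauss(0.0, 1.0) for _ in range(300)]
target = [0.0] * TRUE_LEAD + [
    0.8 * indicator[t] + random.gauss(0.0, 0.5) for t in range(300 - TRUE_LEAD)
]

lead, corr = best_lead(indicator, target, max_lead=6)
print(f"strongest relationship at a lead of {lead} periods (correlation {corr:.2f})")
```

On real data the peak is rarely this clean, and it can shift between business cycles, which is one reason lead times are hard to rely on.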

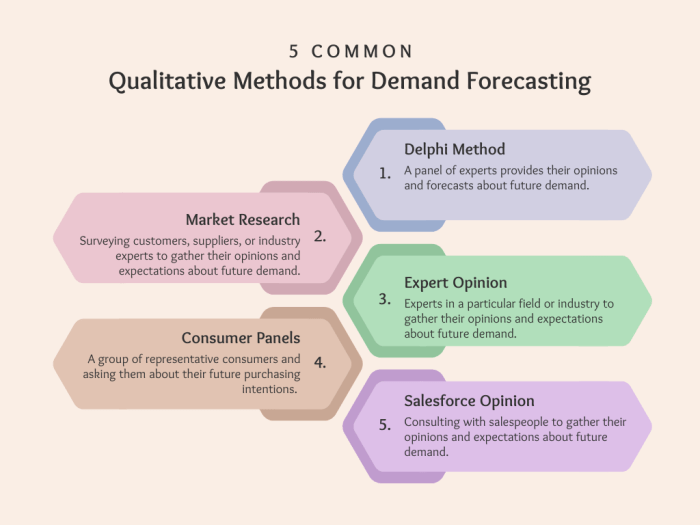

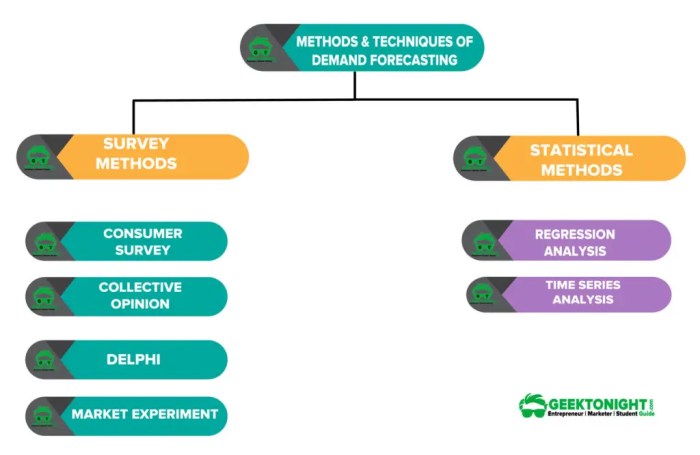

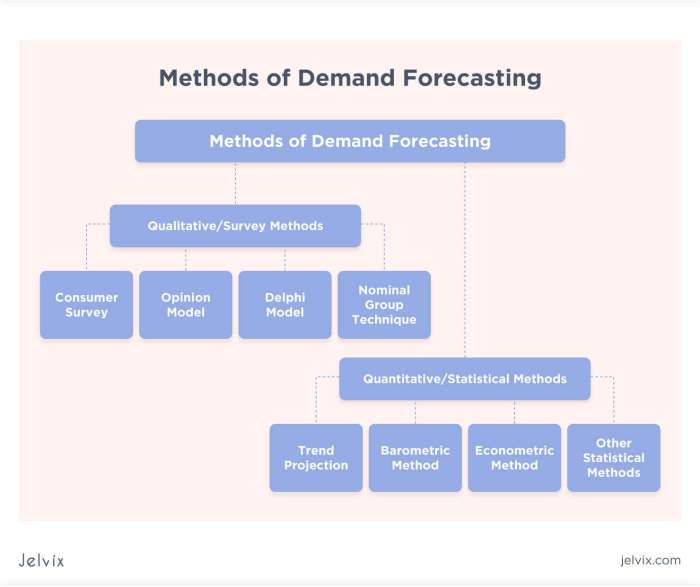

Qualitative Forecasting Methods

Qualitative forecasting methods rely on subjective judgments and opinions rather than purely numerical data. These methods are particularly useful when historical data is scarce, unreliable, or irrelevant to the future economic landscape, or when dealing with complex, unpredictable events. They often complement quantitative methods, providing valuable insights that numerical models might miss.

The Role of Expert Opinion and Delphi Methods

Expert opinion plays a crucial role in qualitative forecasting. Economists, industry analysts, and other specialists offer their insights and predictions based on their knowledge and experience. The Delphi method is a structured communication technique designed to elicit expert opinions in a systematic way. It involves multiple rounds of questionnaires and feedback, aiming to reach a consensus among experts, while minimizing the influence of individual biases. For example, the International Monetary Fund (IMF) frequently uses expert panels and surveys to gather qualitative assessments of global economic conditions, supplementing their quantitative models. These qualitative inputs inform their overall outlook and projections.
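The feedback rounds of the Delphi method can be illustrated with a toy simulation. The revision rule used here, in which every expert moves a fixed fraction of the way toward the group median after each round, is purely an assumption for the sketch; real panels revise their views in far less mechanical ways, and the starting forecasts are invented.

```python
import statistics

def delphi_round(estimates, pull=0.5):
    """One feedback round: each expert moves a fraction `pull` toward the group median."""
    median = statistics.median(estimates)
    return [e + pull * (median - e) for e in estimates]

def run_delphi(estimates, rounds=3, pull=0.5):
    """Return the list of estimates after each round, starting with the initial ones."""
    history = [list(estimates)]
    for _ in range(rounds):
        estimates = delphi_round(estimates, pull)
        history.append(estimates)
    return history

# Hypothetical initial GDP growth forecasts (percent) from five experts.
initial = [1.0, 2.0, 2.5, 3.0, 6.0]
history = run_delphi(initial, rounds=3)

for round_number, estimates in enumerate(history):
    spread = max(estimates) - min(estimates)
    print(f"round {round_number}: median={statistics.median(estimates):.2f}, spread={spread:.3f}")
```

Under this rule the median stays put while the spread halves each round, which mimics convergence toward consensus but says nothing about whether the consensus is right.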

Examples of Qualitative Methods Complementing Quantitative Approaches

Qualitative methods can significantly enhance quantitative forecasting. For instance, a quantitative model might predict strong economic growth based on historical trends, while qualitative insights from industry experts reveal potential disruptions, such as supply chain bottlenecks or regulatory changes, that could materially change the predicted growth rate. Similarly, a company might use econometric models to forecast sales and combine them with market research (focus groups, surveys) to understand consumer sentiment and potential shifts in demand, which yields a more nuanced and realistic prediction.

Potential Biases in Qualitative Forecasting

Relying solely on qualitative forecasting techniques carries inherent risks. Expert opinions can be subjective and biased, reflecting individual perspectives, personal interests, or even unconscious biases. Groupthink, where the desire for consensus overrides critical evaluation, can also skew the results. For example, over-optimistic projections during economic booms or overly pessimistic views during recessions can be driven by such biases. The Delphi method helps mitigate this to some extent, but the potential for bias remains.

Comparison of Qualitative Methods for Short-Term vs. Long-Term Forecasting

The suitability of different qualitative methods varies depending on the forecasting horizon.

| Method | Short-Term Suitability | Long-Term Suitability | Description |
|---|---|---|---|
| Market Research (Surveys, Focus Groups) | High | Medium | Provides insights into current consumer preferences and behavior, useful for short-term sales forecasting. Less reliable for long-term trends. |
| Expert Panels | Medium | High | Experts can assess near-term developments, and their judgment is particularly valuable for long-term projections, although those projections are more uncertain. |
| Delphi Method | Medium | High | The iterative rounds suit both short-term and long-term forecasting, but the process is time-consuming, which limits its use for fast-moving short-term forecasts. |
| Scenario Planning | Low | High | Best suited for long-term forecasting, exploring different potential futures and their implications. Less effective for short-term predictions. |

Emerging Trends in Economic Forecasting

The field of economic forecasting is undergoing a rapid transformation, driven by advancements in data science, computing power, and theoretical modeling. Traditional econometric methods are being augmented, and in some cases replaced, by more sophisticated techniques capable of handling the increasing volume and complexity of available economic data. This shift promises more accurate and timely forecasts, but also presents new challenges related to data quality, model interpretability, and the potential for bias.

The integration of big data and machine learning is revolutionizing economic forecasting. These methods allow for the analysis of vast datasets, including unstructured data like social media posts and news articles, to identify patterns and relationships that might be missed by traditional methods. This increased data granularity can lead to more nuanced and accurate predictions.

Big Data and Machine Learning in Economic Forecasting

Machine learning algorithms, such as neural networks and support vector machines, are particularly well-suited for identifying non-linear relationships within complex economic datasets. For example, analyzing transactional data from online retailers can provide insights into consumer spending habits and predict future demand with greater precision than traditional aggregate measures. Similarly, sentiment analysis of social media posts can offer early warning signals of economic shifts or changes in consumer confidence. The application of these techniques, however, requires careful consideration of data quality, potential biases, and the interpretability of the resulting models. Over-reliance on complex “black box” models can hinder understanding the underlying economic mechanisms driving the forecasts.

Agent-Based Modeling in Economic Simulations

Agent-based modeling (ABM) offers a powerful approach to simulating complex economic systems. Unlike traditional macroeconomic models that rely on aggregate relationships, ABM simulates the interactions of individual agents (e.g., consumers, firms, governments) based on their own rules and behaviors. This allows for the exploration of emergent phenomena and the impact of policy interventions in a more realistic and detailed manner. For example, ABM can be used to simulate the spread of financial contagion in a banking system or the impact of a new tax policy on regional economic growth. The complexity of ABM models, however, requires significant computational resources and careful calibration to ensure realistic behavior.
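To make the idea concrete, here is a deliberately tiny agent-based sketch of financial contagion. Each bank is an agent with a capital buffer and loans to other banks; when a borrower fails, its lenders write off their claims and fail in turn if cumulative losses exceed their capital. The bank names, balance sheet numbers, and the failure rule are hypothetical simplifications chosen for illustration.

```python
def simulate_contagion(capital, exposures, initial_failures):
    """
    Propagate bank failures through a lending network.

    capital:          dict mapping bank -> loss-absorbing capital
    exposures:        dict mapping (lender, borrower) -> amount lent
    initial_failures: banks assumed to fail at the start
    Returns the set of failed banks once the cascade stops.
    """
    failed = set(initial_failures)
    losses = {bank: 0.0 for bank in capital}
    frontier = list(initial_failures)

    while frontier:
        borrower = frontier.pop()
        for (lender, debtor), amount in exposures.items():
            if debtor != borrower or lender in failed:
                continue
            losses[lender] += amount              # lender writes off its claim
            if losses[lender] > capital[lender]:  # buffer exhausted: lender fails too
                failed.add(lender)
                frontier.append(lender)
    return failed

# Hypothetical four-bank system.
exposures = {("B", "A"): 4.0, ("C", "A"): 3.0, ("C", "B"): 6.0, ("D", "C"): 2.0}

well_capitalized = {"A": 5.0, "B": 3.0, "C": 10.0, "D": 4.0}
print(sorted(simulate_contagion(well_capitalized, exposures, ["A"])))  # ['A', 'B']

thinly_capitalized = {"A": 5.0, "B": 3.0, "C": 8.0, "D": 4.0}
print(sorted(simulate_contagion(thinly_capitalized, exposures, ["A"])))  # ['A', 'B', 'C']
```

A small change in one bank's capital changes how far the cascade spreads. This kind of emergent, threshold-driven outcome is what aggregate models tend to miss.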

Artificial Intelligence in Economic Forecasting: Challenges and Opportunities

The use of artificial intelligence (AI) in economic forecasting presents both significant opportunities and considerable challenges. AI algorithms can process massive datasets, identify complex patterns, and generate forecasts quickly, and in some settings more accurately than traditional methods. However, the “black box” nature of many AI algorithms can make it difficult to understand the reasons behind the forecasts, raising concerns about transparency and accountability. Furthermore, bias in the training data and overfitting can lead to inaccurate or misleading predictions. Addressing these challenges requires careful model selection, rigorous validation, and the development of methods for explaining AI-driven forecasts. One example of AI’s application is in predicting inflation rates, where AI algorithms can analyze a wider range of data points (including real-time data from online marketplaces) to produce more timely, and potentially more accurate, forecasts than traditional methods.

Advancements in Computing Power and Forecasting Methodologies

The exponential growth in computing power has significantly changed economic forecasting methodologies. The ability to process and analyze massive datasets in real time has enabled the development and application of more sophisticated forecasting models, including those based on machine learning and agent-based modeling. High-performance computing also allows forecasters to explore a wider range of scenarios and build more robust forecasting systems. For instance, running complex simulations with many variables and iterations, something that was previously computationally infeasible, gives a deeper understanding of potential economic outcomes under different policy scenarios. The result is a more data-driven, scenario-based approach to economic forecasting.

Case Studies of Economic Forecasts

Analyzing successful and unsuccessful economic forecasts provides invaluable insights into the strengths and weaknesses of various forecasting methodologies. Understanding these contrasting cases helps refine future forecasting practices and improve accuracy. This section will examine specific examples, highlighting the methodologies employed and the factors contributing to their success or failure.

Successful Forecast: The 1990s Asian Financial Crisis (Partial Success)

In the lead-up to the 1997 Asian Financial Crisis, the International Monetary Fund (IMF) did not predict the crisis’s timing or severity, but it did issue warnings about vulnerabilities in several Asian economies. Its methodology relied heavily on quantitative models analyzing macroeconomic indicators such as current account deficits, exchange rate pressures, and rapid credit growth. Although these models did not pinpoint the crisis’s onset, the vulnerabilities they identified were borne out by events, which highlights the value of early warning systems based on rigorous data analysis. The IMF’s partial success lay in identifying systemic risks with established quantitative techniques, even though it underestimated the timing and impact. The models’ main limitation was their inability to capture the contagion effect and the speed at which the crisis unfolded, which illustrates how difficult it is to incorporate qualitative factors such as investor sentiment and political instability into purely quantitative models.

Failed Forecast: The 2008 Global Financial Crisis

Many leading economic institutions failed to adequately predict the severity and speed of the 2008 Global Financial Crisis. These failures stemmed from several contributing factors. Overreliance on historical data and assumptions of market efficiency led to underestimation of the risks associated with complex financial instruments like mortgage-backed securities. Furthermore, a lack of attention to systemic risks within the interconnected global financial system hindered accurate forecasting. The models used often failed to account for the potential for cascading failures and the rapid propagation of shocks across different markets. The qualitative factors, such as the widespread lack of regulatory oversight and the inherent instability of the subprime mortgage market, were not adequately incorporated into the prevailing quantitative models. This led to a significant underestimation of the potential for a major economic downturn.

Comparison of Forecasting Approaches

The IMF’s approach in the lead-up to the Asian Financial Crisis, while not perfectly predictive, showed the strength of quantitative models in identifying macroeconomic vulnerabilities and providing early warnings of potential instability. In contrast, the failure to predict the 2008 Global Financial Crisis highlights the limitations of relying solely on quantitative models and historical data, particularly when dealing with complex, interconnected systems and unforeseen events. The 2008 crisis underscores the need for a more holistic approach that integrates qualitative assessments of systemic risks, regulatory environments, and potential triggers for unexpected market disruptions.

Lessons Learned from Case Studies

The following points summarize key lessons from the analyzed case studies:

- Over-reliance on quantitative models without incorporating qualitative factors can lead to inaccurate forecasts.

- Understanding systemic risks and interconnectedness within financial systems is crucial for accurate forecasting.

- Regular review and refinement of forecasting methodologies are essential to adapt to changing economic conditions.

- A combination of quantitative and qualitative methods often provides a more comprehensive and accurate picture.

- Acknowledging limitations of models and incorporating uncertainty into forecasts is crucial for responsible forecasting.

Evaluating Forecasting Accuracy

Accurately assessing the performance of economic forecasts is crucial for informed decision-making. The right evaluation method depends on the context and goals of the forecast, and each of the available accuracy metrics has its own strengths and weaknesses. A thorough evaluation identifies where forecasting models and techniques can be improved and builds trust in the forecasts.

Forecast Accuracy Metrics

Several quantitative metrics are commonly used to evaluate forecast accuracy. These metrics compare the predicted values to the actual observed values. Understanding the nuances of each metric is essential for selecting the most appropriate one for a given application.

| Metric | Formula | Strengths | Weaknesses |
|---|---|---|---|
| Root Mean Squared Error (RMSE) | $\sqrt{\frac{1}{n}\sum_{i=1}^{n}(\text{Actual}_i - \text{Forecast}_i)^2}$ | Penalizes large errors heavily; expressed in the same units as the forecast variable; widely used. | Sensitive to outliers; not equal to the average error magnitude (it is never smaller than MAE). |
| Mean Absolute Error (MAE) | $\frac{1}{n}\sum_{i=1}^{n}\lvert \text{Actual}_i - \text{Forecast}_i \rvert$ | Easy to understand and calculate; less sensitive to outliers than RMSE. | Doesn’t penalize larger errors as heavily as RMSE. |
| Mean Absolute Percentage Error (MAPE) | $\frac{100}{n}\sum_{i=1}^{n}\left\lvert \frac{\text{Actual}_i - \text{Forecast}_i}{\text{Actual}_i} \right\rvert$ | Provides a percentage error, easily interpretable in relative terms. | Undefined when $\text{Actual}_i = 0$; inflated when $\text{Actual}_i$ is close to zero; asymmetric, since for non-negative data under-forecasts are capped at 100% while over-forecasts are unbounded. |
| Mean Squared Error (MSE) | $\frac{1}{n}\sum_{i=1}^{n}(\text{Actual}_i - \text{Forecast}_i)^2$ | Considers the magnitude of errors; emphasizes larger errors. Provides a basis for RMSE calculation. | Sensitive to outliers; units are squared, making interpretation less intuitive than RMSE or MAE. |

Where:

$\text{Actual}_i$ represents the actual observed value at time $i$.

$\text{Forecast}_i$ represents the forecasted value at time $i$.

$n$ represents the total number of observations.
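The four metrics in the table can be computed directly from their definitions. The sketch below is a plain-Python illustration with hypothetical function names and made-up numbers, chosen so the arithmetic is easy to verify by hand.

```python
import math

def mse(actual, forecast):
    """Mean squared error."""
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)

def rmse(actual, forecast):
    """Root mean squared error, in the same units as the data."""
    return math.sqrt(mse(actual, forecast))

def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)

def mape(actual, forecast):
    """Mean absolute percentage error; undefined if any actual value is zero."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)

# Made-up example: errors are 1, 0, -2 and 2.
actual = [2.0, 4.0, 5.0, 10.0]
forecast = [1.0, 4.0, 7.0, 8.0]

print(f"MSE:  {mse(actual, forecast):.2f}")    # 2.25
print(f"RMSE: {rmse(actual, forecast):.2f}")   # 1.50
print(f"MAE:  {mae(actual, forecast):.2f}")    # 1.25
print(f"MAPE: {mape(actual, forecast):.1f}%")  # 27.5%
```

RMSE (1.50) exceeds MAE (1.25) here because squaring gives the two larger errors more weight. MAPE is dominated by the 50% miss on the smallest actual value.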

Interpreting Forecast Error Statistics

Interpreting forecast error statistics involves more than looking at a single number. RMSE and MAE are expressed in the units of the variable being forecast, so a value only means something relative to the scale of that variable and the error tolerance of the decision at hand. An RMSE of 0.5, for instance, is small for a stock index quoted in the thousands but large for GDP growth measured in percentage points. Likewise, an RMSE that is tolerable when predicting a commodity price might be alarmingly high when predicting the number of hospital beds needed during a pandemic, where the cost of error is far greater.

Consider a scenario where a company is forecasting sales for the next quarter. If the RMSE of the forecast is significantly higher than the company’s acceptable error margin, this might signal a need to refine the forecasting model or gather more accurate data. Conversely, a low RMSE could indicate confidence in the forecast and allow for better inventory management or resource allocation.

Forecast Uncertainty

Considering forecast uncertainty is paramount. No forecast is perfect; inherent uncertainty arises from limitations in data, model assumptions, and unforeseen events. Quantifying this uncertainty through techniques like confidence intervals or prediction intervals is crucial. For instance, instead of providing a single point estimate for next year’s inflation, a forecast might provide a range (e.g., 2% to 4%), reflecting the uncertainty associated with the prediction. This allows decision-makers to understand the potential range of outcomes and plan accordingly. Ignoring uncertainty can lead to overly optimistic or pessimistic decisions, potentially resulting in significant financial or operational consequences. A realistic assessment of uncertainty allows for more robust and adaptable strategies.
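A common back-of-the-envelope way to turn a point forecast into a range is to use the spread of past forecast errors. The sketch below assumes those errors are unbiased, roughly normal, and of stable variance, which real forecast errors often are not; the function name and the numbers are hypothetical.

```python
import statistics

def prediction_interval(point_forecast, past_errors, z=1.96):
    """
    Approximate prediction interval: point forecast +/- z * std of past errors.
    z = 1.96 corresponds to roughly 95% coverage under a normal approximation.
    """
    sigma = statistics.stdev(past_errors)  # sample standard deviation
    return point_forecast - z * sigma, point_forecast + z * sigma

# Hypothetical past inflation forecast errors, in percentage points.
past_errors = [-0.5, 0.5, -0.5, 0.5, 0.0]

lower, upper = prediction_interval(3.0, past_errors)
print(f"point forecast 3.0%, approximate 95% interval: {lower:.2f}% to {upper:.2f}%")
```

With a standard deviation of 0.5 percentage points, a 3% point forecast becomes a range of about 2% to 4%, similar to the example in the text.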

Final Summary

From classical econometric models to the cutting-edge applications of artificial intelligence, this review has highlighted the diverse landscape of economic forecasting methods. While no method guarantees perfect accuracy, a nuanced understanding of their strengths and limitations, coupled with a critical evaluation of forecast uncertainty, is crucial for effective decision-making. The ongoing evolution of technology and data availability promises further advancements in forecasting capabilities, presenting both opportunities and challenges for economists and policymakers alike. Ultimately, the most effective approach often involves a blend of quantitative and qualitative methods, informed by a deep understanding of the specific economic context.

FAQ Overview

What are the ethical considerations in using economic forecasts?

Ethical considerations include transparency in methodology, avoiding bias in data selection and interpretation, and acknowledging the inherent uncertainty in forecasts. Misrepresentation or misuse of forecasts for personal gain is unethical.

How frequently are economic forecasts updated?

The frequency of updates varies depending on the forecasting method and the economic variable being predicted. Some forecasts are updated daily, while others are updated monthly, quarterly, or annually.

What is the role of government in economic forecasting?

Governments often utilize economic forecasts to inform policy decisions, budget planning, and resource allocation. Government agencies may conduct their own forecasts or commission them from independent researchers.