Financial Data Analytics Tools Review: Unlocking the secrets hidden within financial data requires the right tools. This review dives into the exciting world of software designed to wrangle, analyze, and visualize financial information, from simple spreadsheets to sophisticated AI-powered platforms. We’ll explore the features, benefits, and potential pitfalls of various tools, helping you choose the perfect fit for your needs – whether you’re a seasoned quant or a curious beginner.

We’ll cover everything from data acquisition and preparation to advanced analytical methods and compelling data visualization. Expect insightful comparisons, practical examples, and a healthy dose of humor to keep things interesting. Get ready to embark on a journey into the heart of financial data analysis!

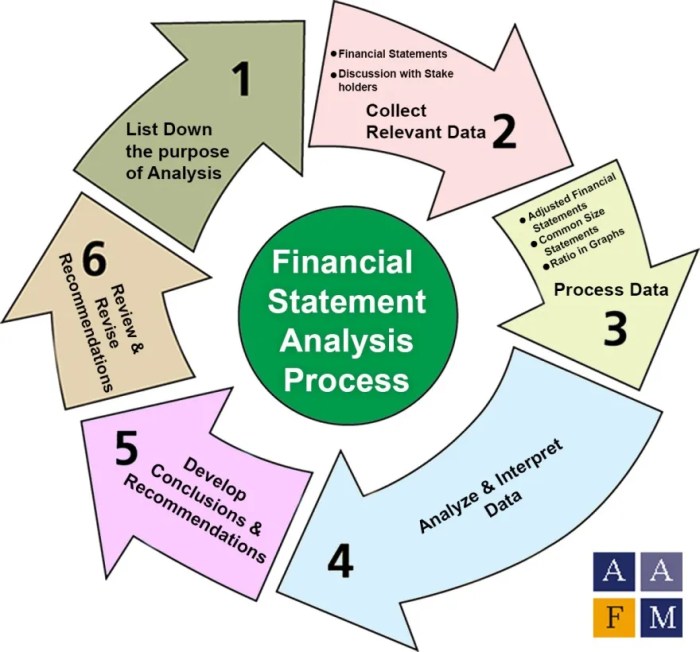

Introduction to Financial Data Analytics Tools

The world of finance is drowning in data – a delightful deluge of numbers, transactions, and market movements. To navigate this ocean of information and extract valuable insights, financial institutions and analysts rely on a diverse array of data analytics tools. Choosing the right tool can be the difference between making shrewd, profitable decisions and… well, let’s just say you wouldn’t want to find yourself on the wrong side of that equation.

The landscape of financial data analytics tools is surprisingly varied, ranging from familiar spreadsheet software to highly specialized statistical packages and powerful cloud-based platforms. Each category caters to different needs and skill levels, offering a spectrum of functionality and user experience. Understanding these differences is crucial for selecting the optimal tool for your specific analytical requirements.

Tool Categories and Key Differentiating Features

Financial data analytics tools can be broadly categorized into several groups, each with its own strengths and weaknesses. Spreadsheet software, such as Microsoft Excel or Google Sheets, provides a familiar and accessible entry point for basic data analysis. While powerful in their own right, their capabilities are limited when dealing with extremely large datasets or complex statistical modeling.

Specialized statistical packages, like R or SAS, offer significantly more advanced statistical capabilities and are preferred by quantitative analysts and researchers needing to perform sophisticated analyses. However, these tools have a steeper learning curve and may not be as user-friendly for those without a strong statistical or programming background. Cost varies widely: R is open-source and free, while commercial packages such as SAS typically require paid licenses.

Cloud-based platforms, such as Tableau, Power BI, or Alteryx, represent a relatively newer breed of tools. They offer a combination of user-friendly interfaces, powerful visualization capabilities, and scalable infrastructure, making them suitable for a wide range of users and data sizes. These platforms are often subscription-based, offering varying levels of functionality depending on the pricing tier. The cost can be a significant factor for smaller organizations.

Comparison of Three Prominent Tools

The choice of the “best” tool is highly subjective and depends on your specific needs and budget. However, comparing three prominent tools across key features can provide valuable insights. Below is a comparison of Microsoft Excel, R, and Tableau.

| Feature | Microsoft Excel | R | Tableau |
|---|---|---|---|
| Pricing | One-time purchase or subscription | Open-source (free), but commercial support available | Subscription-based, various tiers |
| Functionality | Basic data manipulation, charting, and some statistical functions | Extensive statistical modeling, data manipulation, and customizability | Data visualization, data blending, and interactive dashboards |
| User Interface | Highly intuitive and user-friendly | Steeper learning curve, requires coding knowledge | Relatively user-friendly, drag-and-drop interface |

Data Acquisition and Preparation

The journey of a thousand data points begins with a single… well, a lot of data. Before we can unleash the power of financial data analytics tools, we must first wrestle our data into submission. This involves acquiring the raw material, cleaning it up (think of it as a financial spa day), and preparing it for analysis. Get ready for a wild ride, because this is where the real fun begins (or at least, the part where you might need a strong cup of coffee).

Data acquisition and preparation is the often-overlooked, yet critically important, foundation upon which all successful financial data analysis is built. Without properly cleaned and prepared data, even the most sophisticated analytical tools will produce unreliable and potentially misleading results. Think of it as trying to bake a cake with rotten eggs – the outcome won’t be pretty.

Common Financial Data Sources

Financial data comes from a variety of sources, each with its own quirks and challenges. These sources range from readily available public databases to proprietary datasets requiring specialized access and often a hefty price tag. Understanding these different sources is crucial for selecting the appropriate data for your analysis and ensuring data quality.

- Public Databases: These include sources like the Federal Reserve Economic Data (FRED), the World Bank, and various stock exchanges (e.g., NYSE, NASDAQ). These databases offer a wealth of free or low-cost information, but often require some technical skill to navigate and download effectively.

- Financial News and Media Outlets: Websites and publications such as Bloomberg, Reuters, and the Financial Times provide real-time market data, news articles, and analyst reports. Extracting data from these sources often involves web scraping techniques, which can be challenging.

- Company Financial Statements: Securities and Exchange Commission (SEC) filings (like 10-K and 10-Q reports) are essential for analyzing the financial health of publicly traded companies. These reports contain detailed financial information, but require careful parsing and interpretation.

- Proprietary Databases: Commercial providers such as Refinitiv and Bloomberg (through the Bloomberg Terminal) offer comprehensive financial data sets, but these usually come with significant subscription fees.

Data Cleaning and Transformation

Once you’ve gathered your data, the real work begins. Data cleaning is the process of identifying and correcting (or removing) errors, inconsistencies, and inaccuracies. This often involves a multi-step process, like a meticulous surgeon preparing for a delicate operation.

- Handling Missing Values: Missing data is a common problem. Strategies for dealing with this include imputation (filling in missing values based on existing data) or removing rows or columns with excessive missing data. The choice depends on the extent of missing data and the nature of the analysis.

- Outlier Detection and Treatment: Outliers are data points that significantly deviate from the rest of the data. These can be due to errors or genuine extreme values. Methods for handling outliers include removing them, transforming them (e.g., using logarithmic transformation), or using robust statistical methods less sensitive to outliers.

- Data Transformation: This involves converting data into a format suitable for analysis. Common transformations include standardization (centering data around zero with unit variance), normalization (scaling data to a specific range), and creating dummy variables for categorical data.

- Data Consolidation: This involves merging data from multiple sources into a unified dataset. This often requires careful attention to data consistency and matching keys.
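To make the transformations in the list above a little more concrete, here is a minimal sketch using only Python's standard library. The prices and sector labels are made-up illustrative values, and in practice you would more likely reach for Pandas or scikit-learn; the point is simply to show what standardization, normalization, and dummy variables actually compute.

```python
from statistics import mean, pstdev

# Hypothetical closing prices and sector labels (illustrative values only).
prices = [100.0, 102.0, 98.0, 105.0, 95.0]
sectors = ["tech", "energy", "tech", "finance", "energy"]

# Standardization: subtract the mean, divide by the standard deviation.
mu, sigma = mean(prices), pstdev(prices)
standardized = [(p - mu) / sigma for p in prices]

# Min-max normalization: rescale values into the range [0, 1].
lowest, highest = min(prices), max(prices)
normalized = [(p - lowest) / (highest - lowest) for p in prices]

# Dummy variables: one 0/1 indicator per category.
categories = sorted(set(sectors))
dummies = [{c: int(s == c) for c in categories} for s in sectors]

print(standardized)
print(normalized)
print(dummies[0])
```

After standardization the values have mean zero and unit variance; after normalization the smallest value maps to 0 and the largest to 1.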

Handling Missing Data and Outliers

Missing data and outliers are the bane of every data analyst’s existence. They can significantly skew results and lead to inaccurate conclusions. Therefore, a robust strategy for handling them is crucial.

“Garbage in, garbage out” – A timeless adage in data analysis.

For example, imputing missing values using the mean is a simple approach, but it can mislead when the data is heavily skewed, because a few extreme values pull the mean away from typical observations; the median is often a more robust choice in that case. Similarly, simply removing outliers can lead to loss of valuable information if those outliers represent genuine extreme events. Careful consideration and selection of appropriate techniques are key.
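A small sketch of how the choice of imputation value matters, again with the standard library only. The transaction amounts are hypothetical, with one deliberately extreme value, and `None` is assumed to mark a missing entry.

```python
from statistics import mean, median

# Hypothetical transaction amounts; None marks a missing value.
amounts = [120.0, 95.0, None, 110.0, 5000.0, None, 105.0]

observed = [a for a in amounts if a is not None]
mean_fill = mean(observed)      # pulled upward by the 5000.0 value
median_fill = median(observed)  # much less sensitive to the extreme value

imputed_mean = [mean_fill if a is None else a for a in amounts]
imputed_median = [median_fill if a is None else a for a in amounts]

print(f"mean fill:   {mean_fill:.2f}")    # 1086.00
print(f"median fill: {median_fill:.2f}")  # 110.00
```

Filling the gaps with 1086 would plant two values that look nothing like a typical transaction, while the median fill of 110 stays close to the bulk of the data.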

Importing Financial Data into an Analytics Tool

Let’s assume we’ve chosen a popular tool like Python with Pandas. Here’s a step-by-step guide (this is a simplified example, and the specifics will depend on your chosen tool and data source):

- Install necessary libraries: `pip install pandas`

- Import the library: `import pandas as pd`

- Read the data: This depends on the data format. For a CSV file: `data = pd.read_csv('your_file.csv')`. For an Excel file: `data = pd.read_excel('your_file.xlsx')`.

- Clean and transform the data: This involves using Pandas functions to handle missing values, outliers, and perform data transformations as described earlier.

- Explore and analyze: Use Pandas and other libraries (like Matplotlib and Seaborn for visualization) to explore the data and perform your analysis.
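Put together, the steps above might look something like the sketch below. The CSV content, the ticker `ABC`, and the column names are all invented for illustration, and an in-memory string stands in for `your_file.csv` so the example runs on its own. Forward-filling the gap is just one possible cleaning choice.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for 'your_file.csv'.
csv_text = """date,ticker,close
2024-01-02,ABC,10.0
2024-01-03,ABC,
2024-01-04,ABC,10.6
2024-01-05,ABC,10.4
"""

# Read the data, parsing the date column into datetimes.
data = pd.read_csv(io.StringIO(csv_text), parse_dates=["date"])

# Clean: forward-fill the missing close with the last known price.
data["close"] = data["close"].ffill()

# Transform: daily percentage returns from the cleaned prices.
data["return"] = data["close"].pct_change()

# Explore: summary statistics for the numeric columns.
print(data[["close", "return"]].describe())
```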

Exploratory Data Analysis (EDA) Techniques

Unleashing the power of EDA in finance is like becoming a financial Sherlock Holmes – you get to sift through mountains of data, searching for clues to unlock hidden patterns and predict market movements (before your rivals, naturally). EDA isn’t just about crunching numbers; it’s about telling a story with your data, a story that whispers secrets of profitability and risk.

EDA techniques applied to financial data provide a crucial first step in understanding complex financial information. By visually exploring and summarizing the data, analysts can identify trends, outliers, and relationships that might otherwise be missed through purely statistical methods. This process informs subsequent model building and decision-making, significantly improving the accuracy and reliability of financial analysis.

Histograms: Unveiling the Distribution

Histograms are a fantastic visual tool for understanding the distribution of your financial data. Imagine you’re analyzing daily stock returns. A histogram would show you how often different return ranges (e.g., -5% to 0%, 0% to 5%, 5% to 10%) occur. A symmetric, bell-shaped histogram is consistent with a normal distribution (a beautiful sight for a quant!), while a skewed histogram indicates non-normality or the presence of outliers, requiring further investigation. For example, a right-skewed histogram of investment returns could suggest the presence of a few very high-return investments, while the majority of returns are clustered at lower values.
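To see what a histogram is counting under the hood, here is a rough text-only sketch. The returns are hypothetical, the bin edges are an arbitrary choice, and a plotting library such as Matplotlib would normally draw this for you. The skewness figure at the end is the simple population version, included to put a number on "right-skewed".

```python
from statistics import mean, pstdev

# Hypothetical daily returns in percent (illustrative values only).
returns = [-1.2, -0.4, 0.1, 0.3, 0.2, -0.1, 0.5, 4.8, 0.0, -0.3]

# Count observations in fixed-width bins covering [-2%, 5%).
bin_start, bin_width, n_bins = -2.0, 1.0, 7
counts = [0] * n_bins
for r in returns:
    counts[int((r - bin_start) // bin_width)] += 1

# Text histogram: one '#' per observation in each bin.
for i, count in enumerate(counts):
    left = bin_start + i * bin_width
    print(f"[{left:+.0f}%, {left + bin_width:+.0f}%) {'#' * count}")

# Population skewness: a positive value means a longer right tail.
mu, sigma = mean(returns), pstdev(returns)
skewness = mean([((r - mu) / sigma) ** 3 for r in returns])
print(f"skewness: {skewness:.2f}")
```

Most of the observations pile up near zero, and the single 4.8% day sits alone in the last bin, which is exactly the right-skewed shape described above.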

Scatter Plots: Unveiling Relationships

Scatter plots are your go-to tool for exploring the relationship between two variables. Let’s say you want to see if there’s a correlation between a company’s advertising spend and its sales revenue. A scatter plot would display each data point (advertising spend, sales revenue) as a dot on a graph. A positive correlation would show points clustered along an upward-sloping line (more advertising, more sales – a dream!), a negative correlation would show a downward slope, and no correlation would show a random scatter. Identifying these relationships helps in building predictive models. For instance, a strong positive correlation is consistent with increased marketing expenditure leading to higher sales, although correlation on its own does not establish causation.
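The visual impression from a scatter plot is usually backed up with a correlation coefficient. Below is a minimal sketch of the Pearson correlation written out by hand; the advertising and revenue figures are hypothetical, and `pearson_r` is an illustrative helper rather than a library function.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical quarterly figures, in thousands of dollars.
ad_spend = [10, 12, 15, 18, 20, 25]
revenue = [110, 118, 131, 140, 152, 170]

r = pearson_r(ad_spend, revenue)
print(f"Pearson r = {r:.3f}")
```

A value close to +1 corresponds to the tight upward-sloping cloud described above, a value close to -1 to a downward slope, and a value near 0 to a shapeless scatter.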

Box Plots: Spotting Outliers and Medians

Box plots are excellent for summarizing the distribution of a single variable and identifying potential outliers. They visually represent the median, quartiles, and range of the data. In analyzing credit scores, for instance, a box plot would display the median credit score, the interquartile range (the middle 50% of scores), and any outliers (extremely high or low credit scores). These outliers might represent individuals with unusual financial situations requiring further scrutiny. This helps in identifying unusual data points that warrant further investigation, possibly indicating errors or unique circumstances.
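The numbers a box plot draws can be computed directly. The sketch below uses hypothetical credit scores and the common 1.5 × IQR rule for the whiskers; that rule is a convention, not the only way to define an outlier.

```python
from statistics import quantiles

# Hypothetical credit scores (illustrative values only).
scores = [580, 640, 660, 675, 690, 700, 710, 720, 735, 750, 790, 300]

# Quartiles; 'inclusive' treats the data as the whole population of interest.
q1, med, q3 = quantiles(scores, n=4, method="inclusive")
iqr = q3 - q1

# Points beyond 1.5 * IQR from the box are flagged as potential outliers.
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr
outliers = [s for s in scores if s < lower_fence or s > upper_fence]

print(f"Q1={q1}, median={med}, Q3={q3}, IQR={iqr}")
print(f"fences: [{lower_fence}, {upper_fence}]")
print(f"outliers: {outliers}")  # [300]
```

Only the score of 300 falls outside the fences here; whether it is a data-entry error or a genuinely unusual borrower is a question for a human, not the box plot.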

Best Practices for Effective EDA

Before diving into the exciting world of EDA, keep these best practices in mind to ensure your analysis is as insightful (and hilarious) as possible:

- Start with a clear objective: What questions are you trying to answer with your data? Knowing your goal will guide your analysis.

- Clean your data: Garbage in, garbage out! Handle missing values, outliers, and inconsistencies before proceeding.

- Visualize, visualize, visualize: Pictures speak louder than numbers. Use various plots to explore your data from different angles.

- Document your findings: Keep a record of your analyses, insights, and any decisions made based on your EDA.

- Iterate and refine: EDA is an iterative process. Your initial findings may lead to new questions and further analysis.

Advanced Analytical Methods

Now that we’ve wrestled with the basics of data acquisition and exploration, let’s unleash the kraken of advanced analytical methods! This is where the real fun begins – the sophisticated statistical sleuthing and predictive prowess that can turn raw financial data into actionable insights (and maybe even a hefty bonus). Buckle up, buttercup.

We’ll delve into the powerful techniques that separate the financial data analysts from the financial data…well, enthusiasts. These methods allow us to uncover hidden relationships, predict future trends, and make more informed decisions than a seasoned Wall Street veteran (okay, maybe not *that* seasoned, but you get the idea).

Regression Models in Finance

Regression models are the workhorses of financial analysis, allowing us to model the relationship between a dependent variable (like stock price) and one or more independent variables (like interest rates, inflation, or the price of avocado toast – because, let’s be honest, it affects everything). Linear regression assumes a linear relationship, while logistic regression predicts the probability of a binary outcome (e.g., will a company default on its loan?). The choice of model depends heavily on the nature of the dependent variable and the assumptions we can reasonably make about the data. For instance, predicting stock prices might use linear regression, while predicting bankruptcy would leverage logistic regression’s ability to handle binary outcomes. In practice, we’d often employ techniques like regularization (LASSO or Ridge) to prevent overfitting, especially with datasets containing numerous variables. Tools like R and Python’s statsmodels can be used to implement these models, and Excel’s Analysis ToolPak covers basic linear regression.
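As a minimal illustration of the linear case, here is ordinary least squares with a single predictor, written out by hand so the arithmetic is visible. The interest rates and prices are hypothetical, `fit_line` is an illustrative helper, and a real analysis would typically use statsmodels or R and check the model's assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: interest rate (%) vs. a stock's price ($).
rates = [1.0, 2.0, 3.0, 4.0, 5.0]
prices = [52.0, 49.5, 48.0, 45.5, 44.0]

slope, intercept = fit_line(rates, prices)
predicted = intercept + slope * 6.0  # extrapolated price at a 6% rate

print(f"price = {intercept:.1f} + ({slope:.1f}) * rate")
print(f"predicted price at 6%: {predicted:.1f}")
```

In this made-up data each one-point rise in rates goes with a price roughly $2 lower. Extrapolating to 6% is shown only to illustrate prediction; going beyond the observed range is where linear models deserve the most suspicion.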

Time Series Analysis for Financial Forecasting

Predicting the future is a risky business, even for those with crystal balls (which, sadly, are not standard equipment for financial analysts). However, time series analysis offers a structured approach to forecasting financial variables like stock prices, exchange rates, or interest rates. Techniques like ARIMA (Autoregressive Integrated Moving Average) models analyze the historical patterns in data to extrapolate future values. More sophisticated methods, like GARCH (Generalized Autoregressive Conditional Heteroskedasticity) models, account for the volatility clustering often observed in financial markets – meaning periods of high volatility tend to be followed by more high volatility, and calm periods by more calm. ARIMA models are typically implemented using packages such as “forecast” in R or “statsmodels” in Python, while GARCH models are commonly fit with “rugarch” in R or “arch” in Python. For example, a bank might use ARIMA models to forecast loan demand based on past trends.
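A full ARIMA fit is best left to a library, but the autoregressive idea at its core is easy to sketch. The example below fits an AR(1) model, the simplest special case of ARIMA, by least squares and rolls it forward. The loan-demand series is hypothetical and deliberately noise-free so the recovered parameters are easy to check; `fit_ar1` and `forecast` are illustrative helpers, not library functions.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] = const + phi * x[t-1]."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev, mean_curr = sum(prev) / n, sum(curr) / n
    sxy = sum((a - mean_prev) * (b - mean_curr) for a, b in zip(prev, curr))
    sxx = sum((a - mean_prev) ** 2 for a in prev)
    phi = sxy / sxx
    const = mean_curr - phi * mean_prev
    return const, phi


def forecast(series, const, phi, steps):
    """Iterate the fitted recursion forward from the last observation."""
    out, last = [], series[-1]
    for _ in range(steps):
        last = const + phi * last
        out.append(last)
    return out


# Hypothetical monthly loan demand ($M), generated without noise from
# x[t] = 20 + 0.5 * x[t-1] so the fit can be verified by eye.
demand = [100.0, 70.0, 55.0, 47.5, 43.75, 41.875]

const, phi = fit_ar1(demand)
next_two = forecast(demand, const, phi, steps=2)
print(f"const={const:.2f}, phi={phi:.2f}, forecast={next_two}")
```

Real series are noisy, may need differencing (the "I" in ARIMA), and call for diagnostics that a library will provide, so treat this as a picture of the mechanics rather than a forecasting recipe.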

Machine Learning in Financial Modeling

Machine learning algorithms are bringing a whole new level of sophistication (and a healthy dose of excitement) to financial modeling. Decision trees, for instance, can be used to classify borrowers into high-risk and low-risk categories based on a variety of factors, offering a clear and interpretable model. Neural networks, with their complex, interconnected layers, are capable of identifying non-linear relationships in data, making them suitable for complex prediction tasks such as algorithmic trading or fraud detection. Python’s scikit-learn library and R’s caret package offer extensive tools for implementing these algorithms. For example, a credit card company might use a neural network to predict fraudulent transactions based on transaction history and user behavior. The success of these models, however, relies heavily on data quality and careful model tuning to avoid overfitting and ensure robust predictions.
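To show why decision trees are considered interpretable, here is a sketch of the single step a tree repeats at every node: finding the threshold that best separates two classes, scored by Gini impurity. The borrower data is hypothetical and the helpers are illustrative; scikit-learn's tree implementation does considerably more (multiple features, depth control, pruning).

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_threshold(values, labels):
    """Return (threshold, weighted impurity) of the best single split."""
    best_t, best_score = None, float("inf")
    candidates = sorted(set(values))
    for low, high in zip(candidates, candidates[1:]):
        t = (low + high) / 2  # midpoint between neighbouring values
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Hypothetical borrowers: debt-to-income ratio and whether they defaulted.
debt_to_income = [0.10, 0.15, 0.22, 0.30, 0.41, 0.45, 0.52, 0.60]
defaulted = [0, 0, 0, 0, 1, 0, 1, 1]

threshold, impurity = best_threshold(debt_to_income, defaulted)
print(f"split at debt-to-income <= {threshold:.3f} (impurity {impurity:.4f})")
```

The resulting rule reads like something a credit officer could say out loud, which is the appeal of trees compared with a neural network's opaque weights.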

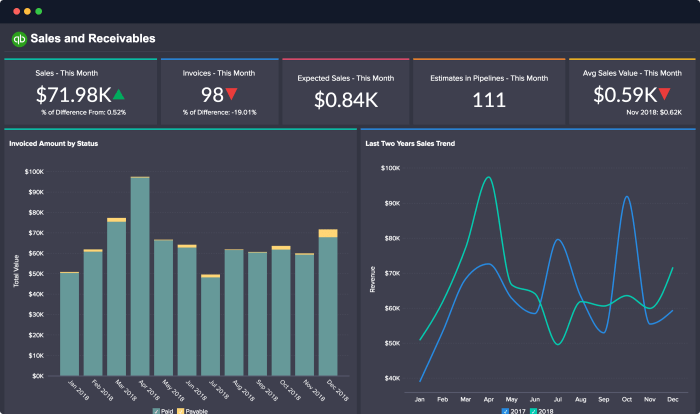

Reporting and Visualization of Results

Turning mountains of financial data into insightful, digestible nuggets requires more than just number-crunching; it demands a flair for the dramatic (in a data-driven way, of course). Effective communication of your findings is the key to unlocking the true value of your analysis, ensuring your hard work doesn’t end up gathering dust on a forgotten hard drive. This section explores how to transform complex financial insights into compelling narratives, using visuals that even your least spreadsheet-savvy colleague can understand and appreciate.

Effective communication of financial data analysis findings relies on a potent blend of visual appeal and clear, concise writing. Think of it as presenting a captivating story, where the data points are the characters and the visualizations are the cinematic special effects. By employing appropriate techniques, we can transform complex financial information into easily understandable narratives, ensuring our insights are not only understood but also acted upon.

Data Visualization Techniques

Visualizations are the superheroes of data communication. They transform complex data into easily digestible narratives, helping audiences quickly grasp key insights. A well-chosen chart can speak volumes where pages of text might fail. For example, a line chart elegantly illustrates trends over time, such as the growth of a company’s revenue. A bar chart effectively compares different categories, like the performance of various investment portfolios. And don’t forget the power of a well-constructed pie chart to showcase the proportions of different components within a whole, such as the allocation of assets in a diversified portfolio. Using the right chart type for the right data dramatically enhances understanding and engagement.

Examples of Compelling Data Visualizations

Let’s imagine we’ve analyzed a company’s financial performance over the past five years. A compelling dashboard might include:

| Metric | Chart Type | Description | Insight |
|---|---|---|---|
| Revenue Growth | Line Chart | Shows revenue over five years, highlighting trends and fluctuations. | Steady growth with a minor dip in Year 3. |
| Profit Margin | Bar Chart | Compares profit margins across different product lines. | Product Line A consistently outperforms others. |
| Expense Breakdown | Pie Chart | Shows the proportion of expenses allocated to different categories (e.g., marketing, R&D, salaries). | Marketing expenses represent a significant portion of the total. |

Importance of Clear and Concise Reporting for Different Audiences

Tailoring your report to your audience is crucial. A detailed technical report for senior management might include complex statistical models and nuanced interpretations, whereas a summary for the board of directors would prioritize key findings and actionable recommendations, presented in a clear and concise manner. Remember, different audiences have different levels of financial literacy and different attention spans. Keep it simple, keep it relevant, and keep it interesting!

Sample Financial Analysis Report

This hypothetical report summarizes a financial analysis of “Acme Corp.” The analysis focused on revenue trends, profitability, and expense management.

| Acme Corp. Financial Analysis Summary |
|---|
| Key Findings: Acme Corp. experienced steady revenue growth over the past five years, with a slight dip in 2023. Profit margins remain healthy, though could be improved by optimizing marketing expenses. Further analysis suggests opportunities for cost reduction in administrative overhead. |

| Year | Revenue ($M) | Profit Margin (%) | Marketing Expenses ($M) |
|---|---|---|---|
| 2022 | 100 | 20 | 15 |
| 2023 | 95 | 18 | 16 |
| 2024 | 105 | 22 | 14 |
| 2025 | 115 | 23 | 15 |
| 2026 | 125 | 25 | 17 |
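The headline statements in a report like this usually rest on a few simple derived figures. As a sketch, the snippet below takes the revenue and marketing numbers from the Acme Corp. table above and derives year-over-year growth, marketing spend as a share of revenue, and the compound annual growth rate; the choice of these three metrics is an assumption about what a reader would want to see.

```python
# Figures copied from the Acme Corp. table (in $M).
revenue = {2022: 100, 2023: 95, 2024: 105, 2025: 115, 2026: 125}
marketing = {2022: 15, 2023: 16, 2024: 14, 2025: 15, 2026: 17}

years = sorted(revenue)

# Year-over-year revenue growth, in percent.
yoy_growth = {
    year: (revenue[year] / revenue[prev] - 1) * 100
    for prev, year in zip(years, years[1:])
}

# Marketing expenses as a percentage of revenue.
marketing_share = {y: marketing[y] / revenue[y] * 100 for y in years}

# Compound annual growth rate over the whole period, in percent.
periods = len(years) - 1
cagr = ((revenue[years[-1]] / revenue[years[0]]) ** (1 / periods) - 1) * 100

for y in years[1:]:
    print(f"{y}: growth {yoy_growth[y]:+.1f}%, marketing {marketing_share[y]:.1f}% of revenue")
print(f"CAGR {years[0]}-{years[-1]}: {cagr:.1f}%")
```

The 2023 dip shows up as -5.0% growth, and that is also the year marketing took its largest share of revenue, which is the kind of observation behind the "optimizing marketing expenses" finding.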

Case Studies

Now that we’ve covered the exciting theoretical aspects of financial data analytics, let’s dive headfirst into the exhilarating world of real-world applications! Think of this section as the “behind-the-scenes” peek at how these tools are actually used to solve real problems, make mountains of money (or at least, avoid losing it), and generally make the world of finance a slightly less chaotic place. Prepare for some seriously impressive case studies that will make you wonder why you didn’t become a data analyst sooner.

These examples will showcase the power of financial data analytics across diverse industries, highlighting both the triumphs and the inevitable (but often hilarious) challenges. We’ll explore how these tools are used to predict market trends, detect fraud, manage risk, and even personalize financial advice – because let’s face it, one-size-fits-all financial advice is about as effective as a screen door on a submarine.

Financial Data Analytics in Banking

The banking sector is a fertile ground for data analytics, employing tools to enhance everything from fraud detection to customer relationship management. Imagine a world where your bank actually *understands* your spending habits instead of just sending you unsolicited credit card offers. That’s the power of data analytics.

For example, a major bank might use machine learning algorithms to identify potentially fraudulent transactions in real-time. By analyzing transaction patterns, location data, and even the time of day, the system can flag suspicious activity and prevent significant financial losses. This often involves tools like SAS, R, and Python, combined with specialized fraud detection software. The benefits are obvious: reduced losses, improved customer trust, and a significantly less stressful day for fraud investigators. Challenges, however, include the ever-evolving tactics of fraudsters and the need for continuous model updates to stay ahead of the curve. It’s a constant arms race, but one where data analytics is proving to be a formidable weapon.

Financial Data Analytics in Investment Management

In the high-stakes world of investment management, data analytics is no longer a luxury; it’s a necessity. Sophisticated algorithms are used to analyze vast amounts of market data, identify investment opportunities, and manage risk. Think of it as having a super-powered crystal ball (powered by algorithms, not magic).

A hedge fund, for instance, might utilize advanced statistical modeling techniques to predict stock prices or identify undervalued assets. Tools like Bloomberg Terminal, Python with libraries like Pandas and Scikit-learn, and specialized quantitative analysis software are often employed. The benefits include higher returns, optimized portfolio construction, and improved risk management. Challenges include the inherent volatility of the market, the difficulty of predicting future events with perfect accuracy, and the ever-present risk of “black swan” events – those unpredictable occurrences that can throw even the most sophisticated models off course.

Financial Data Analytics in Insurance

The insurance industry, often perceived as a bit staid, is experiencing a data-driven revolution. From risk assessment to claims processing, data analytics is transforming how insurers operate. Forget those tedious paperwork-filled days; the future of insurance is algorithmic.

An insurance company might use predictive modeling to assess the risk of a particular policyholder, leading to more accurate pricing and better risk management. This might involve analyzing factors such as age, location, driving history (for car insurance), and even social media activity (yes, really!). Tools like SQL, R, and specialized actuarial software are commonly used. The benefits include improved underwriting accuracy, reduced claim costs, and the ability to offer more personalized and competitive insurance products. Challenges include the need for large, high-quality datasets, the ethical considerations of using personal data, and the potential for algorithmic bias – something that needs to be carefully addressed to ensure fairness and avoid unintended consequences.

Case Study Summary

| Industry | Tool(s) Used | Problem Solved | Outcome |
|---|---|---|---|
| Banking | SAS, R, Python, Fraud Detection Software | Fraudulent Transaction Detection | Reduced financial losses, improved customer trust |
| Investment Management | Bloomberg Terminal, Python (Pandas, Scikit-learn), Quantitative Analysis Software | Stock Price Prediction, Portfolio Optimization | Higher returns, improved risk management |
| Insurance | SQL, R, Actuarial Software | Risk Assessment, Claims Processing | Improved underwriting accuracy, reduced claim costs |

Security and Ethical Considerations

Navigating the world of financial data analytics is like exploring a high-stakes casino: the potential rewards are immense, but the risks of losing everything – reputation, data, even your job – are equally substantial. This section delves into the crucial aspects of security and ethical considerations, ensuring you don’t end up playing poker with loaded dice.

Data security and privacy are paramount in financial data analytics. We’re not just talking about numbers here; we’re dealing with sensitive information that could ruin lives if mishandled. A single breach could expose confidential client data, leading to identity theft, financial fraud, and a massive PR disaster. The consequences are far-reaching, impacting individuals, institutions, and the overall trust in the financial system. Think of it as guarding Fort Knox – except instead of gold, you’re protecting highly sensitive personal and financial data.

Data Security Measures

Implementing robust security measures is not merely a good idea; it’s a necessity. Failure to do so can result in significant legal and financial penalties, as well as irreparable damage to your organization’s reputation. Consider this a preventative measure, akin to installing a high-tech alarm system in your house – you hope you’ll never need it, but it’s far better to have it and not need it than to need it and not have it.

- Access Control: Implement strict access control measures, using role-based access to limit access to sensitive data only to authorized personnel. This is like having a keycard system for your building – only those with the right credentials can enter.

- Data Encryption: Encrypt data both in transit and at rest. This is like using a secret code to protect your messages – even if someone intercepts them, they won’t be able to understand the content.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify vulnerabilities and ensure that your security measures are effective. This is like having a security guard regularly patrolling your building – they can identify and address potential threats before they become a problem.

- Incident Response Plan: Develop and regularly test a comprehensive incident response plan to handle data breaches and other security incidents. This is your emergency plan, the equivalent of having a fire escape route clearly marked and practiced.

- Employee Training: Provide regular security awareness training to employees to educate them about best practices for data security. This is like conducting regular fire drills – everyone knows what to do in case of an emergency.
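Of the measures above, role-based access control is the easiest to picture in code. The sketch below is a toy illustration only: the role names, actions, and `is_allowed` helper are all hypothetical, and a production system would rely on its platform's identity and access management rather than a hand-rolled dictionary.

```python
# Hypothetical mapping of roles to the actions each role may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read_aggregates"},
    "risk_officer": {"read_aggregates", "read_client_records"},
    "admin": {"read_aggregates", "read_client_records", "export_data"},
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", "read_aggregates"))      # True
print(is_allowed("analyst", "read_client_records"))  # False
print(is_allowed("intern", "read_aggregates"))       # False
```

The design choice worth noting is the default: anything not explicitly granted is refused, which is the keycard-system behaviour described in the list.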

Ethical Implications of Financial Data Analytics

The ethical landscape of financial data analytics is a minefield. Using powerful analytical tools responsibly requires careful consideration of potential biases, fairness, and transparency. Failing to do so can lead to discriminatory practices, unfair treatment of individuals, and erosion of public trust. It’s akin to walking a tightrope – one wrong step could lead to a catastrophic fall.

Best Practices for Responsible Use

Responsible use of financial data analytics tools requires a commitment to transparency, fairness, and accountability. This means ensuring that your analyses are free from bias, that your results are accurately represented, and that you are accountable for the impact of your work. Think of it as being a responsible scientist – your results should be verifiable, reproducible, and ethically sound.

- Bias Mitigation: Actively identify and mitigate biases in your data and algorithms to ensure fair and equitable outcomes. This involves critically examining your data for any inherent biases and employing techniques to correct them.

- Transparency and Explainability: Strive for transparency and explainability in your analyses. This means making your methods and results clear and understandable to others, allowing for scrutiny and validation.

- Data Privacy Protection: Prioritize data privacy protection throughout the entire data analytics lifecycle. This includes anonymization, pseudonymization, and other techniques to protect the identity of individuals.

- Accountability and Oversight: Establish mechanisms for accountability and oversight to ensure that your work is conducted ethically and responsibly. This could include regular reviews by ethics committees or independent auditors.
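As one concrete example of the privacy techniques mentioned in the list above, here is a minimal sketch of pseudonymization using a keyed hash (HMAC-SHA256) from Python's standard library. The account identifiers and the key are placeholders; in practice the key would live in a secrets manager, and whether keyed hashing is sufficient depends on your regulatory context.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash that is stable but not reversible without the key."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder key for illustration; never hard-code a real key.
key = b"replace-with-a-secret-from-a-key-vault"

token_a = pseudonymize("ACC-000123", key)
token_b = pseudonymize("ACC-000124", key)

print(token_a)
print(token_a == pseudonymize("ACC-000123", key))  # True: same input, same token
```

Because the same account always maps to the same token, records can still be joined and analyzed, while the raw identifier never has to appear in the analytics dataset.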

Final Review

In conclusion, navigating the landscape of financial data analytics tools can feel like traversing a dense jungle, but with the right map (this review!), the journey becomes much more manageable. Remember, the perfect tool depends on your specific needs and expertise. By carefully considering the factors discussed – functionality, cost, ease of use, and security – you can confidently select a tool that empowers you to extract valuable insights from your financial data and make data-driven decisions with confidence. Happy analyzing!

FAQ Corner

What is the best financial data analytics tool for beginners?

There’s no single “best” tool, but user-friendly options like Google Sheets or Excel offer a gentle introduction to data analysis before progressing to more advanced software.

How much does financial data analytics software typically cost?

Costs vary wildly, from free (open-source options and free tiers of cloud-based services) to tens of thousands of dollars annually for enterprise-level solutions. Pricing often depends on features, user licenses, and data storage capacity.

Are there any free financial data sources I can use?

Yes! Many government agencies and financial institutions provide free access to financial data. However, be aware that the quality and accessibility may vary.

What are the ethical considerations of using financial data analytics?

Ethical considerations include data privacy, bias in algorithms, and the potential for misuse of insights for unfair advantage or discriminatory practices. Responsible data handling is paramount.