Financial Forecasting Methods Comparison: Predicting the future of your finances might sound like gazing into a crystal ball, but it’s actually a serious business with surprisingly sophisticated (and sometimes hilariously complicated) methods. This exploration delves into the fascinating world of qualitative and quantitative forecasting techniques, revealing their strengths, weaknesses, and the occasional unexpected quirk. We’ll journey from the wisdom of crowds (Delphi method, anyone?) to the cold, hard numbers of regression analysis, all in the pursuit of better financial predictions.

From simple moving averages to the mind-bending complexities of ARIMA models, we’ll unpack the tools used to peer into the financial future. We’ll uncover how to choose the right method for your needs, combine different approaches for a more robust forecast, and even learn how to gracefully handle when your predictions go spectacularly wrong (it happens to the best of us!). Prepare for a rollercoaster ride through the world of financial forecasting – buckle up, it’s going to be a wild ride!

Introduction to Financial Forecasting Methods

Financial forecasting, my friends, is not just some crystal ball gazing exercise for fortune tellers. It’s the lifeblood of sound business decision-making. Whether you’re a seasoned CEO or a budding entrepreneur, understanding where your money is going (and, more importantly, *where it’s coming from*) is crucial for survival and, dare we say it, *thriving*. Accurate forecasting allows businesses to secure funding, manage resources efficiently, and navigate the ever-shifting sands of the market with a level of confidence that borders on arrogance (in a good way, of course).

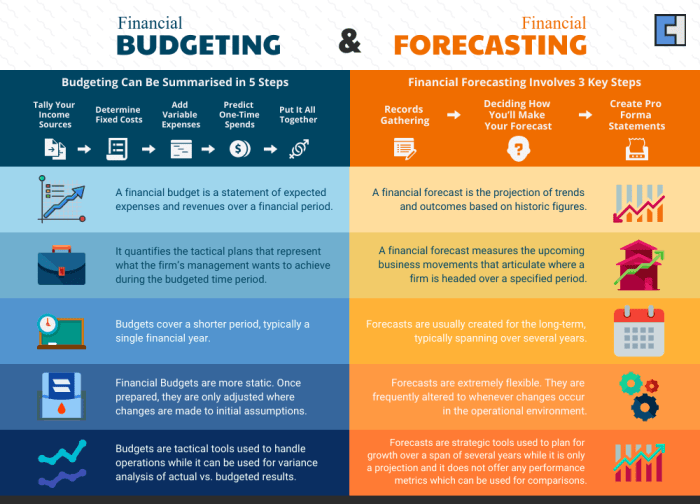

Financial forecasting methods can be broadly categorized into qualitative and quantitative approaches. Qualitative methods rely on expert opinions, surveys, and gut feelings (yes, even those have a place!), while quantitative methods use historical data and statistical models to project future outcomes. Think of it like this: qualitative methods are the seasoned chef tasting the soup, while quantitative methods are the meticulous scientist analyzing the soup’s chemical composition. Both are valuable, and often used in conjunction.

Categorization of Financial Forecasting Methods

The choice of forecasting method depends heavily on the specific situation, the data available, and the desired level of accuracy. A small bakery might rely on simple trend analysis, while a multinational corporation might employ sophisticated econometric models. The beauty (and the terror) lies in the variety.

| Method Name | Description | Advantages | Disadvantages |
|---|---|---|---|
| Qualitative Methods (e.g., Delphi Method, Market Research) | Relies on expert judgment and subjective assessments. | Useful when historical data is scarce or unreliable; incorporates expert knowledge and insights. | Highly subjective; prone to bias; difficult to quantify the level of uncertainty. |
| Time Series Analysis (e.g., Moving Average, Exponential Smoothing) | Analyzes historical data to identify patterns and trends. | Relatively simple to implement; requires minimal data; good for short-term forecasting. | Assumes that past trends will continue; may not be accurate during periods of significant change. |
| Regression Analysis | Identifies relationships between variables to predict future values. | Can incorporate multiple variables; provides statistical measures of accuracy. | Requires significant data; assumptions about the relationships between variables may not hold. |
| Causal Forecasting (e.g., Econometric Models) | Explores the causal relationships between economic factors and financial variables. | Provides a deeper understanding of underlying factors; can be used for long-term forecasting. | Complex and computationally intensive; requires specialized knowledge; data requirements can be substantial. |

Qualitative Forecasting Methods

Predicting the future of finances isn’t just about crunching numbers; sometimes, it’s about tapping into the wisdom of crowds, the whispers of the market, and the gut feelings of experts. Qualitative forecasting methods, while less precise than their quantitative counterparts, offer valuable insights that can significantly enhance the accuracy of financial projections, especially when dealing with factors that defy simple numerical modeling. Think of them as the seasoned detectives of the financial world, piecing together clues to paint a picture of what might lie ahead.

The Delphi Method: Harnessing Collective Wisdom

The Delphi method is a structured communication technique used to gather expert opinions on a particular topic. It involves a series of questionnaires distributed to a panel of experts, with feedback from previous rounds incorporated into subsequent questionnaires. This iterative process helps to refine opinions and reach a consensus, even if the initial views are widely divergent. In financial forecasting, the Delphi method can be invaluable for predicting long-term trends, assessing the impact of disruptive technologies, or gauging the potential success of new products or services. Imagine using it to forecast the long-term impact of a new cryptocurrency on traditional banking – the collective wisdom of experts in finance, technology, and economics could offer a much richer perspective than any single algorithm.

Market Research: Listening to the Marketplace

Market research involves systematically gathering and analyzing data about consumer preferences, buying habits, and market trends. This information is crucial for forecasting sales, pricing strategies, and overall market demand. In financial forecasting, market research helps to ground projections in real-world data, reducing the risk of relying on overly optimistic or pessimistic assumptions. For example, a company launching a new product might conduct market research to gauge consumer interest and estimate potential sales figures. This data would then be incorporated into the financial projections, providing a more realistic picture of the company’s financial future.

Expert Opinions: The Value of Informed Intuition

Expert opinions, often integrated through methods like the Delphi technique, play a critical role in qualitative forecasting. Experienced professionals in relevant fields can provide valuable insights based on their knowledge, experience, and intuition. These opinions can be particularly helpful when dealing with complex or uncertain situations where quantitative data is limited or unreliable. For instance, an expert economist’s assessment of the potential impact of a global political event on the stock market could provide invaluable information for financial forecasting. However, it’s crucial to remember that even expert opinions are subject to bias and uncertainty.

Limitations of Solely Qualitative Methods

While qualitative methods provide valuable context and insights, relying solely on them for financial forecasting can be risky. Qualitative methods lack the precision and objectivity of quantitative methods, making it difficult to quantify uncertainty and establish confidence intervals. Over-reliance on expert opinions, without rigorous testing or validation, can also lead to biased or inaccurate forecasts. For example, if a company bases its financial projections solely on the optimistic views of its executives, without considering external market factors or potential risks, it could lead to unrealistic expectations and financial difficulties. A balanced approach, integrating both qualitative and quantitative methods, is generally recommended for robust and reliable financial forecasting.

Quantitative Forecasting Methods

Predicting the future of finance is like predicting the weather in Scotland – you can make an educated guess, but be prepared for a sudden downpour of unexpected events. Quantitative forecasting methods offer a more structured approach than their qualitative counterparts, relying on hard data and mathematical models to peer into the crystal ball (or, more realistically, the spreadsheet). These methods are our trusty steeds in the wild west of financial prediction, offering a level of precision that gut feeling simply can’t match.

Time Series Analysis versus Causal Modeling

Time series analysis focuses solely on the historical data of a variable to predict its future values. It’s like studying the ripples in a pond to predict the next splash – you only look at the water’s movements, ignoring the frog that might be responsible. Causal modeling, on the other hand, attempts to identify the factors that *cause* changes in the variable. This is like investigating the frog directly – you’re interested in its size, jumping ability, and general frog-like behaviour to predict the next splash. While time series is simpler and often sufficient, causal modeling offers a deeper understanding and potentially more accurate predictions when you can identify relevant causal factors. The choice depends on the data available and on how much explanatory power you need.

Examples of Time Series Models

Several powerful time series models exist, each with its own strengths and quirks. Choosing the right model is like choosing the right tool for the job – a hammer won’t help you build a skyscraper.

- Moving Average: This simple model smooths out fluctuations in the data by averaging values over a specific period. Imagine it as averaging your daily mood over a week – it gives you a smoother picture than looking at your mood swings day by day. A longer averaging period leads to smoother forecasts, but it also lags behind recent changes.

- Exponential Smoothing: This model gives more weight to recent data points, making it more responsive to recent trends. Think of it as remembering your most recent experiences more vividly than those from long ago. Different types of exponential smoothing (simple, double, triple) exist, offering varying levels of complexity and responsiveness.

- ARIMA (Autoregressive Integrated Moving Average): This more sophisticated model combines three parts: an autoregressive part that relates each value to its own past values, differencing (the “integrated” part) that removes trends, and a moving average part that relates each value to past forecast errors. It’s the Swiss Army knife of time series models, capable of handling a wide range of data patterns, but it requires careful parameter estimation and can be computationally intensive.
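To make the exponential smoothing idea concrete, here is a minimal sketch of the simple (single) variant in plain Python. The function name, the sales figures, and the choice of `alpha = 0.5` are illustrative assumptions, not values from any real dataset.

```python
def simple_exponential_smoothing(values, alpha):
    """Return the smoothed series s, with s[0] = values[0] and
    s[t] = alpha * values[t] + (1 - alpha) * s[t - 1]."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [values[0]]
    for value in values[1:]:
        # A higher alpha puts more weight on the newest observation.
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


sales = [100, 110, 120, 130, 140, 150]  # illustrative monthly sales
smoothed = simple_exponential_smoothing(sales, alpha=0.5)
next_period_forecast = smoothed[-1]  # flat one-step-ahead forecast
print(smoothed)  # [100, 105.0, 112.5, 121.25, 130.625, 140.3125]
```

Notice that the last smoothed value (about 140) trails the last actual value (150). Simple smoothing lags behind a steady trend, which is why the double and triple variants exist.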

Building a Regression Model for Financial Forecasting

Building a regression model is like constructing a detailed map of the financial landscape. The process involves several key steps:

- Variable Selection: Identify variables that are likely to influence the target variable (e.g., stock price, sales revenue). This requires careful consideration of economic theory, prior research, and data availability. It’s like choosing the right ingredients for a recipe – you wouldn’t use flour for a soup.

- Model Specification: Choose the functional form of the regression equation (linear, logarithmic, polynomial, etc.). This depends on the relationship between the variables. This is akin to choosing the cooking method – boiling, frying, baking – each works best for different ingredients.

- Model Estimation: Use statistical software to estimate the model parameters (coefficients). This step involves finding the line of best fit through the data points. It’s like finding the perfect seasoning for your recipe.

- Model Evaluation: Assess the model’s goodness of fit (e.g., R-squared, adjusted R-squared) and check for violations of the model assumptions (e.g., normality, homoscedasticity). This is like taste-testing your dish to ensure it’s perfect.
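The estimation and evaluation steps above can be sketched for the simplest case, one predictor, without any statistical package. The data below is hypothetical, and the helper names are our own; in practice you would likely reach for statistical software, as the steps suggest.

```python
def fit_simple_ols(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def r_squared(y, predicted):
    """Share of the variance in y explained by the predictions."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, k):
    """Penalise R-squared for the number of predictors k."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


marketing_spend = [10, 20, 30, 40, 50]   # hypothetical predictor
revenue = [120, 135, 160, 170, 195]      # hypothetical target

intercept, slope = fit_simple_ols(marketing_spend, revenue)
predicted = [intercept + slope * x for x in marketing_spend]
r2 = r_squared(revenue, predicted)
adj_r2 = adjusted_r_squared(r2, n=len(revenue), k=1)
print(intercept, slope, r2, adj_r2)
```

Adjusted R-squared is always at or below plain R-squared here, which is the point: it stops you from "improving" the fit simply by piling on more variables.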

Hypothetical Scenario: Exponential Smoothing for Stock Price Prediction

Let’s say we’re forecasting the daily closing price of a highly volatile tech stock. Given the rapid changes in investor sentiment and market conditions, a simple moving average model would be too slow to react to new information. In this scenario, exponential smoothing, particularly a double exponential smoothing model to capture trends, would be most appropriate because it gives greater weight to recent price movements, providing a more responsive and potentially more accurate forecast. A simple moving average would lag behind the fast-paced changes in the stock price, while a more complex model like ARIMA might be overkill given the volatility and potential for sudden shifts.
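For the curious, double exponential smoothing (often called Holt's linear method) fits in a dozen lines. This is a sketch under stated assumptions: the initial trend is taken as the difference between the first two observations, and the prices and smoothing constants are made up for illustration.

```python
def holt_forecast(values, alpha, beta, horizon=1):
    """Holt's linear (double) exponential smoothing.

    level_t = alpha * y_t + (1 - alpha) * (level_{t-1} + trend_{t-1})
    trend_t = beta * (level_t - level_{t-1}) + (1 - beta) * trend_{t-1}
    The h-step-ahead forecast is level + h * trend.
    """
    level = values[0]
    trend = values[1] - values[0]  # simple initialisation (an assumption)
    for y in values[1:]:
        previous_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


closing_prices = [100.0, 102.0, 101.0, 105.0, 108.0, 107.0]  # hypothetical
forecast = holt_forecast(closing_prices, alpha=0.8, beta=0.2, horizon=3)
print(forecast)
```

A high `alpha` makes the level chase recent prices closely, which suits the volatile stock in this scenario; a lower `beta` keeps the trend estimate from overreacting to a single jump.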

Time Series Analysis in Detail

Time series analysis, my friends, is the art of peering into the crystal ball of data, not with mystical incantations, but with statistical rigor. We’re not predicting the future with certainty (sorry, no lottery numbers here!), but rather identifying patterns and trends within historical data to make informed forecasts. This involves understanding the quirks of our data, like its tendency to wander aimlessly or its penchant for sudden, dramatic shifts.

Stationarity in Time Series Data

Stationarity, in the world of time series, refers to data that doesn’t have a wandering mind. A stationary time series has a constant mean and variance over time, and its autocovariance depends only on the lag between two observations, not on when they occur. Imagine a perfectly level river; that’s stationarity. In contrast, a non-stationary time series is like a river prone to floods and droughts: its behavior changes over time. Why is this important? Because many powerful forecasting methods, like ARIMA models, require stationary data to function properly. Trying to use them on non-stationary data is like trying to build a castle on shifting sand – a recipe for disaster. Transformations like differencing (subtracting each data point from the one that follows it) are often used to achieve stationarity, taming those unruly rivers into predictable streams.
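Differencing is easy to see in action. The sketch below uses an illustrative series with a steady upward trend; the `difference` helper is our own name, not a library function.

```python
def difference(values, lag=1):
    """Subtract from each value the value `lag` periods earlier."""
    return [values[i] - values[i - lag] for i in range(lag, len(values))]


trending = [100, 110, 120, 130, 140, 150]  # mean rises over time
first_diff = difference(trending)
print(first_diff)  # [10, 10, 10, 10, 10]
```

The original series has a rising mean, so it is not stationary, while the differenced series is perfectly flat. Real data is noisier, and sometimes a second round of differencing is needed.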

Smoothing Techniques: Simple and Weighted Moving Averages

Smoothing techniques are like applying a gentle filter to our data, removing the noise and revealing the underlying trend. Simple and weighted moving averages are two popular methods. Let’s illustrate with a hypothetical example of monthly sales data:

| Period | Data (Sales) | Simple Moving Average (3-period) | Weighted Moving Average (3-period) |
|---|---|---|---|
| January | 100 | – | – |
| February | 110 | – | – |
| March | 120 | 110 | 113.33 |
| April | 130 | 120 | 123.33 |
| May | 140 | 130 | 133.33 |
| June | 150 | 140 | 143.33 |

The simple moving average simply averages the data over a specified period (here, 3 months). The weighted moving average assigns different weights to each data point within the period, typically giving more weight to more recent data. The table uses weights of 3/6, 2/6, and 1/6 for the most recent, second most recent, and oldest data points respectively, so the March value is (3 × 120 + 2 × 110 + 1 × 100) / 6 ≈ 113.33. Another common weighting scheme is 0.5, 0.3, and 0.2, which would give 113 for March. The weighted average is often more responsive to recent changes in the data.
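The table can be reproduced in a few lines. This is a minimal sketch, assuming weights of 1/6, 2/6, and 3/6 from oldest to newest, which are the weights that match the table's weighted column; the 0.5, 0.3, 0.2 scheme is included for comparison.

```python
def simple_moving_average(values, window):
    """Average of the last `window` values, for each period that has enough history."""
    return [sum(values[i - window + 1 : i + 1]) / window
            for i in range(window - 1, len(values))]


def weighted_moving_average(values, weights):
    """Weights are ordered oldest to newest and should sum to 1."""
    window = len(weights)
    return [sum(w * v for w, v in zip(weights, values[i - window + 1 : i + 1]))
            for i in range(window - 1, len(values))]


sales = [100, 110, 120, 130, 140, 150]  # January to June, from the table

sma = simple_moving_average(sales, 3)
wma = weighted_moving_average(sales, [1 / 6, 2 / 6, 3 / 6])
wma_alt = weighted_moving_average(sales, [0.2, 0.3, 0.5])

print(sma)                           # [110.0, 120.0, 130.0, 140.0]
print([round(v, 2) for v in wma])    # [113.33, 123.33, 133.33, 143.33]
print([round(v, 2) for v in wma_alt])  # [113.0, 123.0, 133.0, 143.0]
```

Both weighted versions sit above the simple average because sales are rising and the newest month counts for more.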

Assumptions Underlying ARIMA Models

ARIMA (Autoregressive Integrated Moving Average) models are the heavyweights of time series forecasting. However, they come with baggage – assumptions that must be met for reliable results. These include:

* Stationarity: As discussed earlier, the data needs to be stable over time once the differencing step (the d in the model) has been applied.

* Linearity: The relationship between past and future values should be linear.

* Constant Variance: The variability of the data should remain consistent over time.

* No Autocorrelation in Residuals: After fitting the model, the leftover errors (residuals) should be random and uncorrelated.

Selecting Appropriate ARIMA Model Parameters

Choosing the right ARIMA model (p, d, q) – where p represents the autoregressive order, d the degree of differencing, and q the moving average order – can feel like navigating a minefield. There’s no magic formula, but best practices include:

* Autocorrelation and Partial Autocorrelation Functions (ACF and PACF): These plots show the correlations between data points at different lags. As a rule of thumb, the lag at which the PACF cuts off suggests a value for p, and the lag at which the ACF cuts off suggests a value for q.

* Information Criteria: Metrics like AIC (Akaike Information Criterion) and BIC (Bayesian Information Criterion) help compare different ARIMA models and select the one that best balances model fit and complexity. Lower values are generally preferred.

* Diagnostic Checks: After fitting a model, it’s crucial to check the residuals for autocorrelation and normality to ensure the model assumptions are met.
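The autocorrelation function behind an ACF plot is simple enough to compute by hand. The sketch below uses a made-up series and our own helper name; the PACF and the information criteria are normally obtained from a statistics package and are not shown here. The ±1.96/√n band is the usual approximate 95% threshold for judging whether a correlation differs from zero.

```python
import math


def autocorrelation(values, lag):
    """Sample autocorrelation of `values` at the given lag."""
    n = len(values)
    mean = sum(values) / n
    denominator = sum((v - mean) ** 2 for v in values)
    numerator = sum((values[t] - mean) * (values[t - lag] - mean)
                    for t in range(lag, n))
    return numerator / denominator


series = [12, 15, 14, 18, 21, 19, 23, 26, 24, 28, 31, 29]  # hypothetical
band = 1.96 / math.sqrt(len(series))  # approximate 95% significance band

for lag in range(1, 5):
    r = autocorrelation(series, lag)
    flag = "significant" if abs(r) > band else "within band"
    print(f"lag {lag}: {r:+.3f} ({flag})")
```

The same function applied to the residuals of a fitted model doubles as a diagnostic check: if the model has done its job, the residual autocorrelations should stay inside the band.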

Causal Modeling Techniques

Predicting the future is a tricky business, like trying to catch a greased piglet. While time series analysis looks at the past to predict the future, causal modeling takes a more Sherlock Holmes-ian approach, seeking to uncover the *why* behind the numbers. It’s about identifying the relationships between different variables to understand what drives financial performance, not just what it’s done historically. This allows for a more nuanced and potentially more accurate forecast, especially when dealing with significant changes in the market.

Causal modeling uses statistical techniques to model the relationship between a dependent variable (what we want to predict) and one or more independent variables (factors that influence the dependent variable). Multiple linear regression is a popular method, but it’s not the only game in town. Other techniques, like vector autoregression (VAR) and structural equation modeling (SEM), offer different strengths depending on the complexity of the relationships involved. Choosing the right tool is crucial, like choosing the right weapon for a financial ninja warrior.

Multiple Linear Regression and Other Causal Models

Multiple linear regression is the workhorse of causal modeling, elegantly expressing the relationship between a dependent variable and several independent variables using a linear equation. Other methods, such as VAR models, are better suited for analyzing the interdependencies between multiple time series variables, useful when predicting variables that influence each other reciprocally. SEM, on the other hand, is particularly powerful for testing complex relationships between latent variables (variables that cannot be directly measured). The choice of the most suitable method depends on the specific forecasting problem and the nature of the data available. For example, if you’re predicting company profits based on sales, marketing spend, and economic growth, multiple linear regression might be perfectly adequate. However, if you are attempting to model the intricate interplay of interest rates, inflation, and economic growth, a VAR model might be a more appropriate choice.

Challenges of Identifying and Measuring Relevant Independent Variables

Identifying the right independent variables is like searching for the Holy Grail – challenging, but potentially rewarding. Omitting a crucial variable can lead to biased and inaccurate predictions, while including irrelevant ones can add noise and complexity, obscuring the true relationships. Measuring these variables accurately is equally important. Data quality issues, such as missing values or measurement errors, can significantly impact the reliability of the model. For instance, trying to predict housing prices without considering interest rates would be like navigating a maze blindfolded. Similarly, using inaccurate or incomplete sales data will lead to flawed predictions of future revenue.

Model Diagnostics and Residual Analysis

Once you’ve built your model, don’t declare victory just yet. Thorough model diagnostics are crucial to ensure the model’s accuracy and reliability. Residual analysis, which examines the differences between the actual and predicted values, is a key component of this process. Large or patterned residuals indicate problems with the model, such as omitted variables or non-linear relationships. Think of residual analysis as a quality control check for your forecasting model. Ignoring it is like ignoring a flashing warning light on your dashboard – you might end up in a ditch.
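Two quick residual checks can be sketched in plain Python: the mean residual (is the model biased?) and the Durbin-Watson statistic (are neighbouring residuals correlated?). The actual and predicted values below are invented purely to show the mechanics.

```python
def residuals(actual, predicted):
    """Actual minus predicted, period by period."""
    return [a - p for a, p in zip(actual, predicted)]


def durbin_watson(resid):
    """About 2 suggests no first-order autocorrelation in the residuals;
    values toward 0 suggest positive, toward 4 negative autocorrelation."""
    numerator = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    denominator = sum(e ** 2 for e in resid)
    return numerator / denominator


actual = [101, 109, 121, 129]      # hypothetical observations
predicted = [100, 110, 120, 130]   # hypothetical model output

resid = residuals(actual, predicted)
mean_residual = sum(resid) / len(resid)
dw = durbin_watson(resid)
print(resid, mean_residual, dw)  # [1, -1, 1, -1] 0.0 3.0
```

Here the mean residual is zero, so there is no overall bias, but the statistic of 3.0 flags the alternating pattern. That is exactly the kind of structure a quick glance at the average would miss.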

Hypothetical Regression Model Equation

Let’s imagine we want to predict a company’s quarterly revenue (Revenue). We might propose a model like this:

Revenue = β0 + β1*MarketingSpend + β2*EconomicGrowth + β3*CompetitorSales + ε

Where:

* Revenue: The company’s quarterly revenue (dependent variable).

* MarketingSpend: The company’s marketing expenditure in the quarter (independent variable, expected positive impact).

* EconomicGrowth: The rate of economic growth in the quarter (independent variable, expected positive impact).

* CompetitorSales: The total sales of the company’s main competitors in the quarter (independent variable, expected negative impact).

* β0: The intercept (the expected revenue when all independent variables are zero).

* β1, β2, β3: Regression coefficients representing the impact of each independent variable on revenue.

* ε: The error term, representing the unpredictable variations in revenue.

This hypothetical model suggests that higher marketing spending and economic growth will lead to higher revenue, while higher competitor sales will negatively affect the company’s revenue. The coefficients (β1, β2, β3) quantify the strength of these relationships. Naturally, a real-world model would be far more complex, potentially including many more variables and interactions.
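To show how the coefficients would be estimated, here is a sketch that fits the equation above by ordinary least squares using the normal equations. Everything numeric is an assumption: the six quarters of data are synthetic and were generated without noise from the coefficients 50, 2, 10, and -0.5, so the error term is zero and the fit should simply recover those numbers.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_ols(rows, y):
    """Return [b0, b1, ...] minimising squared error; b0 is the intercept."""
    design = [[1.0] + list(r) for r in rows]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic quarters: (MarketingSpend, EconomicGrowth, CompetitorSales)
quarters = [(10, 2.0, 100), (12, 1.5, 110), (15, 2.5, 90),
            (11, 3.0, 120), (18, 1.0, 105), (20, 2.2, 95)]
revenue = [50 + 2 * m + 10 * g - 0.5 * c for m, g, c in quarters]

coefficients = fit_ols(quarters, revenue)
print([round(b, 4) for b in coefficients])  # approximately [50, 2, 10, -0.5]
```

The signs come out as the model expects: positive for marketing and growth, negative for competitor sales. With real, noisy data the estimates would only approximate the true relationships, and a statistics package would also report standard errors.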

Combining Forecasting Methods

Predicting the future of finance is like predicting the weather in Scotland – you can have a pretty good guess, but you’re also likely to get soaked. Therefore, relying on a single forecasting method is akin to navigating a financial storm in a rowboat made of soggy biscuits. A more robust approach involves combining the strengths of different methods, creating a hybrid forecast that’s far more resilient and accurate. This synergistic approach, blending the art of qualitative judgment with the science of quantitative analysis, offers a fascinatingly effective way to improve predictive power.

The benefits of combining qualitative and quantitative forecasting methods are numerous and surprisingly delightful. Qualitative methods, such as expert opinions and market research, provide valuable insights into the “soft” factors that influence financial outcomes, such as consumer confidence or shifts in regulatory environments. Quantitative methods, on the other hand, are the data-crunching powerhouses, analyzing historical trends and statistical relationships to project future values. By combining these approaches, we gain a more comprehensive understanding of the forces at play, mitigating the inherent weaknesses of each individual method. Think of it as getting the best of both worlds – the wisdom of experience and the precision of numbers.

Hybrid Forecasting: A Practical Example

Let’s imagine we’re forecasting sales for a new type of self-folding laundry basket (a revolutionary product, naturally). Quantitative methods, such as time series analysis of similar product launches and regression analysis based on marketing spend, might predict sales of 10,000 units. However, qualitative methods, incorporating expert opinions from sales managers and market research indicating strong consumer interest, suggest a higher figure – perhaps 15,000 units. Instead of choosing one prediction over the other, a hybrid approach would integrate both. A weighted average, perhaps assigning 60% weight to the quantitative forecast and 40% to the qualitative forecast (based on the reliability of each method’s data and expertise), would yield a more balanced prediction of 12,000 units (0.6 × 10,000 + 0.4 × 15,000). Because it draws on both sources of evidence, this combined figure is less exposed to the blind spots of either method, although only a comparison with actual sales can show which forecast was closest.
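The arithmetic behind the hybrid forecast is a plain weighted average. A minimal sketch, with a helper name of our own choosing:

```python
def combine_forecasts(forecasts, weights):
    """Weighted average of several forecasts; weights must sum to 1."""
    if len(forecasts) != len(weights):
        raise ValueError("need one weight per forecast")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(f * w for f, w in zip(forecasts, weights))


quantitative = 10_000  # units, from the time series and regression models
qualitative = 15_000   # units, from sales managers and market research

hybrid = combine_forecasts([quantitative, qualitative], [0.6, 0.4])
print(round(hybrid))  # 12000
```

The same helper works for three or more forecasts, as long as the weights still add up to 1.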

Weighting Different Forecasting Methods

Determining the appropriate weights for different forecasting methods is crucial for a successful hybrid approach. This isn’t a matter of arbitrary decision-making; rather, it requires careful consideration of several factors. The accuracy and reliability of historical data used in quantitative methods play a significant role. The expertise and experience of the individuals providing qualitative inputs also influence the weighting. For example, if the quantitative model has consistently shown high accuracy in the past, it might receive a higher weight. Conversely, if the qualitative input comes from a highly experienced and reputable industry expert, it might deserve more consideration. Statistical measures, such as mean absolute error (MAE) or root mean squared error (RMSE), can help assess the past performance of quantitative models and inform the weighting process. Essentially, it’s a process of carefully evaluating the evidence and assigning weights that reflect the relative confidence in each method.
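One simple way to turn past performance into weights is to make each weight inversely proportional to that method's historical error. This is just one possible heuristic, offered as an assumption rather than a rule, and the MAE figures below are hypothetical.

```python
def inverse_error_weights(errors):
    """Weights proportional to 1 / error, normalised to sum to 1."""
    if any(e <= 0 for e in errors):
        raise ValueError("errors must be positive")
    inverse = [1 / e for e in errors]
    total = sum(inverse)
    return [v / total for v in inverse]


# Hypothetical past MAE, in units, for the quantitative and qualitative forecasts
past_mae = [500, 1500]
weights = inverse_error_weights(past_mae)
print([round(w, 2) for w in weights])  # [0.75, 0.25]
```

A method that has been three times as accurate ends up with three times the weight. Judgment still matters: a recent structural change in the market may justify overriding weights that are based purely on history.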

Reconciling Discrepancies Between Forecasting Methods

When different forecasting methods produce significantly different results, it’s not a sign of failure; rather, it’s an opportunity for deeper investigation. These discrepancies often highlight areas of uncertainty or conflicting information. The first step is to identify the source of the divergence. Is it due to differing assumptions, limitations in the data, or conflicting external factors? A thorough analysis of the assumptions and data used by each method is essential. A sensitivity analysis, examining how changes in key assumptions affect the forecast, can also be valuable. Once the sources of discrepancy are understood, they can be addressed by refining the methods, gathering additional data, or incorporating new insights. The goal is not necessarily to eliminate the discrepancies entirely, but rather to understand their origins and incorporate this understanding into a more robust and informed overall forecast. Sometimes, the best solution is to simply acknowledge the uncertainty and present a range of possible outcomes, rather than a single point estimate.

Evaluating Forecasting Accuracy

Predicting the future is a tricky business, even for seasoned financial wizards. While we’ve explored various forecasting methods, the crucial question remains: how accurate are our predictions? This section delves into the fascinating world of evaluating forecasting accuracy, providing you with the tools to judge the effectiveness of your chosen method and avoid making decisions based on wishful thinking rather than sound data.

Evaluating the accuracy of your financial forecasts is paramount. Choosing the right metric and interpreting the results correctly are vital for making informed business decisions. Incorrectly assessing forecast accuracy can lead to disastrous consequences, from missed investment opportunities to overstocked warehouses. Therefore, understanding and applying appropriate accuracy measures is not just beneficial—it’s essential for survival in the competitive world of finance.

Measures of Forecasting Accuracy

Several metrics exist to quantify forecasting accuracy. Each has its strengths and weaknesses, and the best choice often depends on the specific context and the nature of the data. Let’s explore some of the most commonly used measures, remembering that no single metric tells the whole story.

- Mean Absolute Error (MAE): This measures the average absolute difference between the forecasted and actual values. It’s easy to understand and interpret, giving a clear picture of the average magnitude of forecast errors. The formula is:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| \text{Actual}_i - \text{Forecast}_i \right|$$

where ‘n’ is the number of periods and ‘i’ represents each period.

- Root Mean Squared Error (RMSE): RMSE is similar to MAE, but it squares the errors before averaging them and then takes the square root. This gives more weight to larger errors, making it more sensitive to outliers. The formula is:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( \text{Actual}_i - \text{Forecast}_i \right)^2}$$

Interpreting Accuracy Metrics

Lower values of MAE and RMSE indicate higher accuracy. For example, an MAE of 5 means that, on average, your forecasts are off by 5 units. However, the interpretation depends heavily on the scale of the data. An MAE of 5 units is a serious miss if a small shop sells about 20 units a day, but negligible for a retailer that sells 5,000. Therefore, always consider the context and magnitude of your data when interpreting these metrics, for example by expressing the error as a percentage of typical actual values.

Calculating Accuracy Metrics: A Hypothetical Example

Let’s illustrate the calculation with a simple example. Imagine we’re forecasting monthly sales for a bakery. The following table shows the actual and forecasted sales for four months, along with each month’s absolute error and squared error, the ingredients of MAE and RMSE.

| Period | Actual Value | Forecasted Value | Absolute Error | Squared Error |
|---|---|---|---|---|
| Month 1 | 100 | 95 | 5 | 25 |
| Month 2 | 110 | 115 | 5 | 25 |
| Month 3 | 120 | 125 | 5 | 25 |
| Month 4 | 105 | 100 | 5 | 25 |

In this example, MAE = (5+5+5+5)/4 = 5 and RMSE = √[(25+25+25+25)/4] = 5. This shows a consistent average error of 5 units.
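The same calculation in code, as a small sketch using the bakery figures from the table (the function names are our own):

```python
import math


def mean_absolute_error(actual, forecast):
    """Average size of the forecast errors, ignoring their sign."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def root_mean_squared_error(actual, forecast):
    """Square root of the average squared error; penalises large misses more."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


actual = [100, 110, 120, 105]    # bakery sales, months 1 to 4
forecast = [95, 115, 125, 100]

mae = mean_absolute_error(actual, forecast)
rmse = root_mean_squared_error(actual, forecast)
print(mae, rmse)  # 5.0 5.0
```

MAE and RMSE coincide here only because every error has the same size. As soon as one month is missed by more than the others, RMSE rises above MAE.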

Limitations of Quantitative Accuracy Measures

While quantitative measures like MAE and RMSE are valuable, relying solely on them can be misleading. They don’t capture the direction of errors (consistent overestimation or underestimation) or the potential impact of individual large errors on business decisions. For instance, consistently underestimating demand could lead to lost sales, while consistently overestimating it could result in increased inventory costs. An acceptable-looking average error can mask these crucial details.
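A quick way to expose the direction of errors is to compute the mean error with its sign kept, alongside MAE. The sketch below compares two hypothetical forecasts for the same four months: both have an MAE of 5, but only one is consistently too low.

```python
def mean_error(actual, forecast):
    """Signed average error: positive means the forecast ran too low."""
    return sum(a - f for a, f in zip(actual, forecast)) / len(actual)


def mean_absolute_error(actual, forecast):
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


actual = [100, 110, 120, 105]
mixed = [95, 115, 125, 100]       # errors +5, -5, -5, +5
always_low = [95, 105, 115, 100]  # errors +5 every month

print(mean_absolute_error(actual, mixed), mean_error(actual, mixed))            # 5.0 0.0
print(mean_absolute_error(actual, always_low), mean_error(actual, always_low))  # 5.0 5.0
```

Judged on MAE alone the two forecasts are indistinguishable, yet the second one would leave the shelves short every single month.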

Final Thoughts

Ultimately, mastering financial forecasting isn’t about finding the *perfect* method, but rather understanding the strengths and limitations of each approach. By thoughtfully combining qualitative insights with the power of quantitative analysis, businesses can build more resilient, accurate, and – dare we say – *fun* financial predictions. So, ditch the crystal ball and embrace the power of data-driven decision making; your wallet (and your sanity) will thank you.

Essential FAQs

What if my forecast is wildly inaccurate?

Don’t panic! Inaccurate forecasts are a learning opportunity. Analyze what went wrong, refine your methodology, and remember that even the most sophisticated models are not crystal balls. A little humility goes a long way in forecasting.

How much data do I need for accurate forecasting?

The amount of data needed depends heavily on the method used. Simpler methods might work with less data, while more complex models (like ARIMA) require significantly more historical information for reliable results. More data is generally better, but data quality is paramount.

Can I use financial forecasting for personal finance?

Absolutely! While the techniques are often applied to businesses, the principles of forecasting can be adapted for personal budgeting and financial planning. Simpler methods like moving averages can be quite effective for personal finance forecasting.

Are there free tools available for financial forecasting?

Yes, many spreadsheet programs (like Excel or Google Sheets) offer built-in functions for basic time series analysis and regression. There are also free and open-source statistical software packages available online that provide more advanced capabilities.