Mastering financial modeling is crucial for making sound business decisions. This guide delves into the essential best practices, encompassing data integrity, model design, assumption management, effective communication, and rigorous auditing. From understanding the importance of data validation to leveraging sensitivity analysis and employing clear documentation strategies, we’ll equip you with the knowledge to build robust, reliable, and insightful financial models.

We will explore various modeling techniques, including discounted cash flow (DCF) analysis and Monte Carlo simulations, while also highlighting the critical role of choosing the right software and tools to streamline your workflow. By adhering to these best practices, you can significantly enhance the accuracy, transparency, and overall effectiveness of your financial modeling efforts, leading to more informed and confident decision-making.

Data Integrity and Validation

Accurate and reliable data is the cornerstone of any effective financial model. Garbage in, garbage out is a maxim that holds particularly true in this context. Data integrity and validation are therefore crucial steps, ensuring the model’s outputs are trustworthy and can be used for informed decision-making. Without rigorous validation, even the most sophisticated model can produce misleading results.

Importance of Data Cleansing and Error Detection

Data cleansing and error detection are essential for maintaining data integrity. Cleansing involves identifying and correcting or removing inaccurate, incomplete, irrelevant, duplicated, or improperly formatted data. Error detection involves using various techniques to pinpoint anomalies and inconsistencies within the dataset. Failure to address these issues can lead to significant errors in the model’s projections, potentially resulting in poor investment decisions, inaccurate budgeting, or flawed financial planning. For example, a single incorrect data point in a revenue projection could lead to a substantial miscalculation of profitability, impacting investment strategies and resource allocation.

Examples of Common Data Errors and Their Impact

Several common data errors can significantly impact model results. These include: typographical errors (e.g., entering “10000” instead of “1000”), inconsistent units (e.g., mixing USD and EUR), missing values (e.g., unfilled cells in a spreadsheet), duplicate entries, and outliers (e.g., unusually high or low values). The impact of these errors can range from minor discrepancies to completely inaccurate model outputs. For instance, a simple typo in a key financial ratio could drastically alter the model’s valuation of a company. Similarly, missing data on key expenses could lead to an overly optimistic profit projection.

Methods for Validating Data Sources and Ensuring Consistency

Validating data sources involves verifying the reliability and accuracy of the information used. This can be done by comparing data from multiple sources, checking for inconsistencies, and evaluating the credibility of the source itself. Ensuring consistency involves establishing a standardized format for all data inputs, using clear and consistent naming conventions, and regularly reviewing the data for errors and anomalies. Techniques such as cross-referencing data with external databases or industry benchmarks can help improve the accuracy and reliability of the model’s inputs. Employing data dictionaries and metadata to document data sources and definitions also helps maintain consistency.

Data Validation Checklist

A comprehensive data validation checklist should be used to ensure data quality throughout the modeling process. This checklist should include steps to verify the accuracy, completeness, and consistency of the data.

| Step | Description | Example | Impact of Failure |
|---|---|---|---|
| Source Verification | Confirm the reliability of the data source. | Verify financial statements from a reputable auditing firm. | Using unreliable data leads to inaccurate model outputs. |
| Data Type Validation | Check if data types match expected formats (e.g., numbers, dates). | Ensure revenue figures are numeric and not text. | Incorrect data types can cause calculation errors. |
| Range Checks | Verify data falls within reasonable limits. | Check if growth rates are within a plausible range. | Unrealistic values can skew model results. |
| Consistency Checks | Compare data from multiple sources for discrepancies. | Compare revenue figures from income statements and sales reports. | Inconsistencies can lead to inaccurate projections. |
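
The type, range, and consistency checks in the checklist can be sketched in a few lines of Python. This is a minimal illustration rather than a complete validation layer: the field names (`revenue`, `period_end`, `growth_rate`), the plausible growth band, and the 1% reconciliation tolerance are all assumptions chosen for the example.

```python
from datetime import date


def validate_record(record, growth_bounds=(-0.5, 1.0)):
    """Return a list of human-readable issues found in one input record."""
    issues = []

    # Data type validation: revenue must be numeric, not text such as "1,000".
    revenue = record.get("revenue")
    if isinstance(revenue, bool) or not isinstance(revenue, (int, float)):
        issues.append(f"revenue is not numeric: {revenue!r}")

    # Data type validation: the period end must be a real date object.
    period_end = record.get("period_end")
    if not isinstance(period_end, date):
        issues.append(f"period_end is not a date: {period_end!r}")

    # Range check: growth must be numeric and inside the assumed plausible band.
    growth = record.get("growth_rate")
    low, high = growth_bounds
    if isinstance(growth, bool) or not isinstance(growth, (int, float)):
        issues.append(f"growth_rate is missing or not numeric: {growth!r}")
    elif not low <= growth <= high:
        issues.append(f"growth_rate {growth} is outside [{low}, {high}]")

    return issues


def reconcile(source_a, source_b, tolerance=0.01):
    """Consistency check: keys whose values differ by more than a relative tolerance."""
    mismatches = []
    for key in sorted(set(source_a) | set(source_b)):
        a, b = source_a.get(key), source_b.get(key)
        if a is None or b is None:
            mismatches.append(key)  # present in only one of the two sources
        elif abs(a - b) > tolerance * max(abs(a), abs(b)):
            mismatches.append(key)
    return mismatches
```

A record that passes returns an empty list, so the same function can be run over every input row and the combined issues reviewed before the model is refreshed.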

Comparison of Data Validation Techniques

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Data Cleansing | Identifying and correcting or removing inaccurate, incomplete, irrelevant, duplicated, or improperly formatted data. | Improves data quality, reduces errors. | Can be time-consuming, requires expertise. |
| Cross-referencing | Comparing data from multiple sources to identify inconsistencies. | Increases data reliability, identifies errors. | Requires access to multiple data sources. |
| Statistical Analysis | Identifying outliers and anomalies using statistical methods. | Detects unusual patterns, highlights potential errors. | Requires statistical expertise, can be complex. |
| Visual Inspection | Manually reviewing data for errors and inconsistencies. | Simple, effective for small datasets. | Time-consuming, prone to human error for large datasets. |
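
As one possible form of the statistical analysis technique, the sketch below flags outliers with the interquartile range (IQR) rule. The multiplier `k=1.5` is a common convention, not something prescribed here, and the right threshold depends on the data being checked.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical monthly revenue figures with one suspicious entry.
monthly_revenue = [1000, 1020, 980, 1010, 990, 1005, 10000]
suspects = iqr_outliers(monthly_revenue)
```

A flagged value is only a candidate for review. It may be a typo such as an extra zero, or it may be a genuine one-off event that should stay in the dataset.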

Model Structure and Design

A well-structured financial model is crucial for accuracy, transparency, and ease of use. A poorly designed model, conversely, can lead to errors, confusion, and wasted time. This section will cover key aspects of designing robust and maintainable financial models.

Modular Model Design

Adopting a modular design significantly improves a model’s usability and maintainability. Instead of a single, monolithic worksheet, a modular approach breaks down the model into smaller, self-contained sections or modules. Each module performs a specific function, such as calculating revenue, expenses, or debt servicing. This allows for easier troubleshooting, updates, and collaboration. For example, a revenue module might contain separate sub-modules for each product line, simplifying the process of making changes to individual product forecasts. The benefits include improved readability, easier debugging, and the ability to reuse modules across different models.
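
The same idea carries over when a model is built in code instead of a spreadsheet. The sketch below is a hypothetical illustration of modular structure: the function names, the cost-of-goods ratio, and the fixed cost input are assumptions made for the example.

```python
def revenue_module(units_by_product, price_by_product):
    """Revenue per product line; each line can be changed independently."""
    return {
        product: units_by_product[product] * price_by_product[product]
        for product in units_by_product
    }


def expense_module(revenue_by_product, cogs_ratio, fixed_costs):
    """Variable costs as a share of total revenue, plus fixed costs."""
    total_revenue = sum(revenue_by_product.values())
    return total_revenue * cogs_ratio + fixed_costs


def operating_profit(units_by_product, price_by_product, cogs_ratio, fixed_costs):
    """Top-level model: wires the modules together without duplicating logic."""
    revenue = revenue_module(units_by_product, price_by_product)
    expenses = expense_module(revenue, cogs_ratio, fixed_costs)
    return sum(revenue.values()) - expenses
```

Because each module has a single job, a change to one product forecast touches only the inputs of `revenue_module`, and each function can be tested on its own.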

Worksheet Organization and Naming Conventions

Clear organization and consistent naming are paramount for model clarity. Worksheets should be logically grouped and named descriptively. For example, instead of “Sheet1,” use names like “Revenue Projections,” “Cost of Goods Sold,” or “Balance Sheet.” Inputs should be clearly separated from calculations and outputs. Consider using color-coding or other visual cues to further enhance organization. A consistent naming convention for cells and ranges (e.g., using prefixes like “Rev_” for revenue, “COGS_” for cost of goods sold) improves readability and reduces the risk of errors.

Effective Use of Formulas and Functions

Mastering Excel formulas and functions is essential for building efficient and accurate models. Leveraging built-in functions like SUM, IF, VLOOKUP, and INDEX/MATCH can significantly reduce the number of manual calculations and minimize the risk of errors. Using named ranges instead of cell references makes formulas more readable and easier to understand. For instance, instead of `=SUM(A1:A100)`, use `=SUM(TotalRevenue)`, where “TotalRevenue” is a named range referring to cells A1:A100. Furthermore, using array formulas can perform complex calculations efficiently, avoiding the need for many helper columns. For example, an array formula could efficiently sum values based on multiple criteria without the need for intermediate steps.

Pitfalls of Overly Complex Models

While sophisticated models can be powerful, excessive complexity can lead to several problems. Overly complex models are difficult to understand, audit, and maintain. They are more prone to errors, and debugging can become a time-consuming nightmare. Over-reliance on complex formulas can obscure the underlying logic, making it challenging to identify and correct errors. Simplicity and clarity should always be prioritized over unnecessary complexity. A simpler model that accurately reflects the key drivers of the business is always preferable to a highly complex model prone to errors.

Building a Clear and Concise Model Narrative

A well-written narrative accompanying the model is crucial for understanding its purpose, assumptions, and limitations. This narrative should clearly explain the model’s structure, the key inputs and outputs, and the underlying assumptions. It should also document any limitations or potential biases. Including clear descriptions of the calculations performed and the sources of the data used further enhances transparency and credibility. The narrative should be concise and easy to understand, even for users who are not experts in financial modeling. This enhances the model’s value as a communication tool, enabling stakeholders to easily grasp its insights and implications.

Assumptions and Sensitivity Analysis

A robust financial model relies heavily on the accuracy and transparency of its underlying assumptions. Clearly documenting these assumptions is crucial for model understanding, validation, and the assessment of potential risks. Sensitivity analysis, then, allows us to systematically evaluate the impact of changes in these assumptions on the model’s outputs, providing valuable insights for decision-making.

Documenting Model Assumptions

All assumptions underpinning a financial model should be explicitly stated and justified. This documentation should be clear, concise, and easily accessible to all stakeholders. The rationale behind each assumption should be explained, along with any data sources or methodologies used to derive them. This level of detail promotes transparency and allows for easy auditing and modification as new information becomes available. Failing to document assumptions can lead to misunderstandings, misinterpretations, and ultimately, flawed decision-making.

Examples of Key Assumptions in Financial Models

The specific assumptions incorporated into a financial model depend heavily on its purpose. For instance, a discounted cash flow (DCF) model for valuing a company might include assumptions about revenue growth rates, operating margins, capital expenditures, and the discount rate. A leveraged buyout (LBO) model would incorporate assumptions about debt financing terms, interest rates, and exit multiples. A pro forma income statement might include assumptions about sales growth, cost of goods sold, and operating expenses. In each case, the selection and justification of assumptions are critical for the model’s credibility.

Methods for Performing Sensitivity Analysis

Sensitivity analysis systematically examines the impact of changes in key input variables on the model’s output. Common methods include:

- One-at-a-time (OAT) analysis: This involves changing one input variable at a time while holding all other variables constant. This approach provides a clear understanding of the individual impact of each variable.

- Scenario analysis: This method involves defining different plausible scenarios based on various combinations of input variables. This allows for a more holistic assessment of risk. For example, a best-case, base-case, and worst-case scenario could be modeled.

- Monte Carlo simulation: This sophisticated technique uses random sampling to generate a probability distribution of possible outcomes, providing a more comprehensive understanding of the uncertainty associated with the model’s output. This is particularly useful when dealing with multiple interdependent variables.
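
The first two methods can be sketched with a toy NPV function. Everything numeric here is hypothetical: the five-year horizon, the first-year cash flow, and the base inputs are assumptions used only to show the mechanics, so the resulting figures carry no meaning beyond the example.

```python
def npv(initial_investment, first_year_cash_flow, growth_rate, discount_rate, years=5):
    """NPV of a cash flow growing at a constant rate, received at each year end."""
    value = -initial_investment
    for t in range(1, years + 1):
        cash_flow = first_year_cash_flow * (1 + growth_rate) ** (t - 1)
        value += cash_flow / (1 + discount_rate) ** t
    return value


# Hypothetical base-case inputs.
BASE = {
    "initial_investment": 1_000_000,
    "first_year_cash_flow": 300_000,
    "growth_rate": 0.05,
    "discount_rate": 0.10,
}


def one_at_a_time(base, shift=0.10):
    """OAT: move each input by -/+ `shift` (relative), holding the others at base."""
    results = {}
    for name, value in base.items():
        low = npv(**{**base, name: value * (1 - shift)})
        high = npv(**{**base, name: value * (1 + shift)})
        results[name] = (low, high)
    return results


# Scenario analysis: each scenario overrides several inputs at once.
SCENARIOS = {
    "worst": {"growth_rate": 0.045, "discount_rate": 0.11},
    "base": {},
    "best": {"growth_rate": 0.055, "discount_rate": 0.09},
}


def scenario_analysis(base, scenarios):
    """Return the NPV under each named scenario."""
    return {label: npv(**{**base, **overrides}) for label, overrides in scenarios.items()}
```

OAT isolates the effect of each variable, while the scenario function shows what happens when several move together, which is why the two are usually run side by side.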

Illustrative Impact of Assumption Changes

The following table illustrates the impact of changes in key assumptions on the net present value (NPV) of a hypothetical investment project.

| Assumption | Base Case | Optimistic Case (10% favorable change) | Pessimistic Case (10% adverse change) |
|---|---|---|---|
| Revenue Growth Rate | 5% | 5.5% | 4.5% |
| Discount Rate | 10% | 9% | 11% |
| Initial Investment | $1,000,000 | $1,000,000 | $1,000,000 |
| Net Present Value (NPV) | $200,000 | $280,000 | $110,000 |

Visual Representation of Sensitivity Analysis

A tornado diagram provides a clear visual representation of the sensitivity analysis results. The diagram would consist of horizontal bars, each representing a key input variable. The length of each bar represents the magnitude of the impact of a +/- 10% change in that variable on the NPV. The longest bars represent the most sensitive variables. For example, the revenue growth rate might have the longest bar, indicating that it has the largest impact on the NPV, followed by the discount rate, and then the initial investment with the shortest bar. The diagram clearly highlights which variables exert the most significant influence on the model’s output, guiding the focus of further analysis and risk management efforts.
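
The data behind a tornado diagram is just a list of NPV swings sorted from widest to narrowest. The sketch below builds that list and draws it as text bars. The NPV function and all inputs are hypothetical, and the ranking it produces depends entirely on those assumed inputs, so it will not necessarily match the ordering described above.

```python
def project_npv(inputs, years=5):
    """Toy NPV: constant-growth cash flows discounted at a flat rate."""
    value = -inputs["initial_investment"]
    for t in range(1, years + 1):
        cash_flow = inputs["first_year_cash_flow"] * (1 + inputs["growth_rate"]) ** (t - 1)
        value += cash_flow / (1 + inputs["discount_rate"]) ** t
    return value


def tornado_rows(base, variables, shift=0.10):
    """Return (name, npv_low, npv_high) tuples sorted by swing width, widest first."""
    rows = []
    for name in variables:
        down = project_npv({**base, name: base[name] * (1 - shift)})
        up = project_npv({**base, name: base[name] * (1 + shift)})
        rows.append((name, min(down, up), max(down, up)))
    return sorted(rows, key=lambda row: row[2] - row[1], reverse=True)


def render(rows, width=40):
    """Draw each swing as a text bar scaled to the widest swing."""
    widest = (rows[0][2] - rows[0][1]) or 1.0  # avoid dividing by zero
    lines = []
    for name, low, high in rows:
        bar = "#" * max(1, round(width * (high - low) / widest))
        lines.append(f"{name:<20} {bar}")
    return "\n".join(lines)


# Hypothetical base-case inputs.
BASE_INPUTS = {
    "initial_investment": 1_000_000,
    "first_year_cash_flow": 300_000,
    "growth_rate": 0.05,
    "discount_rate": 0.10,
}
rows = tornado_rows(BASE_INPUTS, ["growth_rate", "discount_rate", "initial_investment"])
print(render(rows))
```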

Documentation and Communication

Effective documentation and clear communication are crucial for the success of any financial model. A well-documented model ensures transparency, facilitates collaboration, and allows for easier auditing and future modifications. Conversely, poor communication can lead to misinterpretations, incorrect decisions, and ultimately, financial losses. This section outlines best practices for documenting and communicating financial model results to various stakeholders.

Model Documentation Best Practices

Comprehensive model documentation is essential for understanding the model’s logic, assumptions, and limitations. This includes a detailed description of the model’s purpose, inputs, calculations, outputs, and any underlying assumptions. A well-structured documentation report should be easily navigable and understandable by both technical and non-technical users. It should also include a clear version control system to track changes and updates.

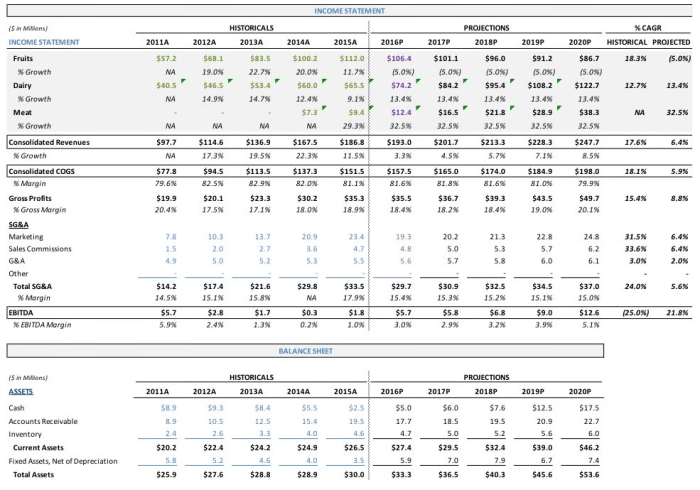

Clear and Concise Model Outputs

Model outputs should be presented in a clear, concise, and easily understandable manner. Avoid overwhelming stakeholders with excessive data; instead, focus on presenting key findings and insights in a visually appealing and easily digestible format. This might involve summarizing complex data into key performance indicators (KPIs) or using charts and graphs to highlight important trends and patterns. For example, instead of presenting a massive spreadsheet of raw data, focus on presenting a summary table highlighting key financial metrics such as Net Present Value (NPV), Internal Rate of Return (IRR), and Payback Period.
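
A summary of this kind can be produced directly from the projected cash flows. The sketch below computes NPV and a simple payback period. It assumes year-end cash flows and linear recovery within a year, and the function names are illustrative.

```python
def payback_period(initial_investment, cash_flows):
    """Years until cumulative cash flows recover the investment.

    Assumes cash arrives evenly within each year. Returns None if the
    investment is never recovered over the projection horizon.
    """
    if initial_investment <= 0:
        return 0.0
    remaining = initial_investment
    for year, cash_flow in enumerate(cash_flows, start=1):
        if cash_flow >= remaining:
            return year - 1 + remaining / cash_flow
        remaining -= cash_flow
    return None


def summarize(initial_investment, cash_flows, discount_rate):
    """Condense a cash flow projection into a small table of key metrics."""
    present_value = sum(
        cf / (1 + discount_rate) ** t for t, cf in enumerate(cash_flows, start=1)
    )
    return {
        "NPV": round(present_value - initial_investment, 2),
        "Payback (years)": payback_period(initial_investment, cash_flows),
    }
```

Presenting the two or three numbers from `summarize` alongside a chart is usually easier for stakeholders to absorb than the full projection.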

Effective Communication of Model Results to Stakeholders

Effective communication involves tailoring the presentation of model results to the specific audience. For example, a presentation to senior management might focus on high-level summaries and key strategic implications, while a presentation to a technical team might delve into the model’s underlying mechanics and assumptions. Using a combination of verbal explanations, visual aids, and written reports can enhance understanding and facilitate informed decision-making. Consider using storytelling techniques to make the data more engaging and memorable. For instance, when presenting a forecast for sales growth, connect the projected numbers to real-world market trends or company initiatives.

Model Documentation Report Template

A comprehensive model documentation report should include the following sections:

| Section | Content |
|---|---|
| Executive Summary | A brief overview of the model’s purpose, key findings, and limitations. |
| Model Purpose and Objectives | A clear statement of the model’s intended use and the questions it aims to answer. |
| Data Sources and Methodology | A detailed description of the data sources used, data validation techniques employed, and the model’s calculation methodology. |
| Assumptions and Limitations | A transparent discussion of the key assumptions underlying the model and its limitations. |
| Model Outputs and Interpretation | A clear explanation of the model’s outputs and how they should be interpreted. |
| Sensitivity Analysis | An analysis of the model’s sensitivity to changes in key input variables. |
| Appendix | Supporting documentation, such as data tables, detailed calculations, and source code. |

Using Clear Visual Aids

Visual aids are crucial for effectively communicating complex information. For instance, a bar chart can clearly show the relative contribution of different revenue streams, while a line chart can illustrate trends over time. A well-designed dashboard can present multiple key performance indicators (KPIs) in a concise and easily understandable format. For example, imagine a dashboard showing projected revenue growth over five years, alongside projected profit margins and market share. The dashboard could use different colored lines to represent different scenarios (e.g., best-case, base-case, worst-case) and use clear labels and legends to ensure easy interpretation. Another example could be a heatmap illustrating the correlation between different variables, visually highlighting strong positive or negative relationships. These visual aids allow stakeholders to quickly grasp the key insights without needing to delve into detailed spreadsheets or reports.

Model Auditing and Review

Regular audits are crucial for maintaining the accuracy, reliability, and overall integrity of financial models. Without them, even the most meticulously constructed models can become unreliable over time, leading to flawed decision-making. A robust audit process helps identify errors, ensures compliance with standards, and enhances confidence in the model’s outputs.

Importance of Regular Model Audits

Regular model audits are essential for identifying and correcting errors before they impact critical decisions. They provide an independent assessment of the model’s design, assumptions, and calculations, ensuring that the model continues to accurately reflect the underlying financial reality. The frequency of audits should depend on the model’s complexity, the volatility of the underlying data, and the criticality of the decisions based on the model’s output. For instance, a high-stakes model used for merger and acquisition valuations might require quarterly audits, while a simpler budgeting model might only need an annual review.

Common Errors Found During Model Reviews

Model reviews often uncover a range of errors, from simple data entry mistakes to more complex logical flaws. Common errors include incorrect formulas, inconsistent data, flawed assumptions, inadequate documentation, and a lack of appropriate sensitivity analysis. For example, a simple transposition error in a cell reference can cascade through the model, producing significantly inaccurate results. Similarly, using outdated or incorrect data sources can lead to unreliable projections. Another frequent issue is the lack of clear and comprehensive documentation, making it difficult to understand the model’s logic and assumptions.

Methods for Ensuring Model Accuracy and Reliability

Several methods contribute to model accuracy and reliability. These include rigorous data validation and cleansing procedures, using robust and well-tested formulas, implementing version control to track changes, and performing thorough sensitivity analysis to assess the impact of varying assumptions. Regular independent review by someone unfamiliar with the model’s creation can also provide valuable insights and catch errors easily missed by the original builder. Furthermore, incorporating automated checks and validations within the model itself can significantly reduce the risk of human error. For instance, a simple check could flag any negative cash flows, which would be highly unusual in certain contexts.
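
Automated checks of this kind can be very small. The sketch below flags negative operating cash flow, as in the example above, and adds a balance sheet identity check (assets equal liabilities plus equity) as a second illustration. The field names and the tolerance are assumptions.

```python
def integrity_checks(periods, tolerance=0.01):
    """Return (period label, message) flags for a list of period dictionaries."""
    flags = []
    for period in periods:
        # Accounting identity: assets should equal liabilities plus equity.
        gap = period["assets"] - (period["liabilities"] + period["equity"])
        if abs(gap) > tolerance:
            flags.append((period["label"], f"balance sheet out of balance by {gap:,.2f}"))

        # Context-dependent check: negative operating cash flow may be an error.
        if period["operating_cash_flow"] < 0:
            flags.append((period["label"], "negative operating cash flow"))
    return flags
```

Running such checks on every recalculation turns silent errors into visible flags, though each flag still needs a human to decide whether it is a mistake or a legitimate result.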

Steps Involved in a Thorough Model Audit

A thorough model audit typically involves several key steps. First, the auditor reviews the model’s documentation to understand its purpose, design, and assumptions. Next, they independently validate the data sources and ensure their accuracy and consistency. The auditor then verifies the accuracy of the formulas and calculations, checking for logical errors and inconsistencies. Sensitivity analysis is performed to assess the model’s robustness under different scenarios. Finally, the auditor documents their findings and provides recommendations for improvement. This entire process should be well documented, with a clear audit trail of all changes and findings.

Model Audit Checklist

A comprehensive model review should include a checklist to ensure all aspects are covered. This checklist could include items such as:

- Verification of data sources and accuracy.

- Review of formulas and calculations for correctness.

- Assessment of assumptions and their impact on results.

- Examination of the model’s structure and logic.

- Evaluation of the documentation’s clarity and completeness.

- Testing of the model’s sensitivity to changes in inputs.

- Confirmation that the model’s outputs are reasonable and consistent with expectations.

- Review of the model’s version control and change management process.

- Assessment of compliance with relevant regulations and standards.

Software and Tools

The choice of software and tools significantly impacts the efficiency, accuracy, and maintainability of a financial model. Selecting the right tools and mastering their functionalities are crucial for building robust and reliable models. This section will explore various software packages, spreadsheet functions, add-ins, and best practices for efficient model development.

Comparison of Financial Modeling Software Packages

Several software packages cater to financial modeling needs, each with its strengths and weaknesses. Microsoft Excel remains the industry standard, offering a familiar interface and extensive functionality. However, more specialized software like Alteryx, Python with libraries like Pandas and NumPy, and dedicated financial modeling platforms offer enhanced capabilities for complex analyses and large datasets. Excel’s accessibility and widespread use make it advantageous for collaboration and ease of understanding, while specialized software often provides superior performance and automation features for intricate models. For instance, Alteryx excels in data preparation and cleansing, streamlining the data import process that often consumes significant time in Excel. Python offers unmatched flexibility and scalability for handling extremely large datasets and performing advanced statistical analysis, something that would be cumbersome in Excel.

Advantages and Disadvantages of Spreadsheet Functions

Spreadsheet functions are fundamental building blocks of financial models. Functions like SUM, IF, VLOOKUP, and INDEX/MATCH are essential for data manipulation and calculation. However, over-reliance on nested functions can lead to complex and difficult-to-audit models. While functions like SUMPRODUCT offer concise solutions for array calculations, their complexity can hinder readability and debugging. Conversely, simpler functions, while potentially requiring more cells or helper columns, improve transparency and maintainability. For example, using multiple SUM functions instead of a single, complex SUMPRODUCT function might increase the number of formulas but significantly enhances model readability and the ability to identify errors.

Utilizing Add-ins and Macros to Enhance Model Efficiency

Add-ins and macros significantly enhance model efficiency by automating repetitive tasks and adding specialized functionalities. Excel’s Analysis ToolPak add-in provides data analysis tools for statistical and engineering work that are not loaded by default. Custom macros can automate data import, validation, and report generation. However, poorly designed macros can introduce errors and make models harder to maintain. Well-documented and modular macros are essential for ensuring model integrity and ease of use. For example, a macro could automatically download data from a financial website, clean it, and input it directly into the model, eliminating manual data entry and reducing the risk of human error.

Best Practices for Using Excel in Financial Modeling

Excel’s versatility makes it crucial to adopt best practices. Employing clear and consistent naming conventions for sheets and cells is paramount for readability. Using cell referencing instead of hardcoding values improves model flexibility and reduces the risk of errors. Regular data validation ensures data accuracy and prevents inconsistencies. Implementing robust error handling mechanisms helps identify and address potential issues. For example, using data validation to restrict input values to specific ranges prevents incorrect data from being entered. Furthermore, using conditional formatting can highlight potential errors or inconsistencies, aiding in quick identification and resolution.

Workflow for Efficient Financial Model Building in Excel

An efficient workflow begins with meticulous planning. Define the model’s scope and objectives clearly. Design the model structure logically, separating data input, calculations, and outputs into distinct sections. Use named ranges to improve readability and maintainability. Implement robust error handling and data validation. Thoroughly test the model with various inputs and scenarios. Finally, document the model comprehensively, explaining the assumptions, formulas, and data sources. This structured approach ensures a robust and easily understandable model. For example, a project could begin with defining key performance indicators (KPIs), gathering data, creating input sheets, developing calculation sections, and finally, creating an output sheet summarizing the key findings. Each step should be meticulously documented, ensuring that the model is transparent and easily understood by others.

Specific Modeling Techniques

Financial modeling relies on several key techniques to analyze and forecast financial performance. Understanding and correctly applying these techniques is crucial for creating robust and reliable models. This section will explore some of the most commonly used methods, highlighting their applications and best practices.

Discounted Cash Flow (DCF) Analysis

DCF analysis is a fundamental valuation method used to estimate the value of an investment based on its expected future cash flows. The core principle is that money received today is worth more than the same amount received in the future due to its potential earning capacity. This is reflected in the discount rate, which accounts for the time value of money and risk. A DCF model typically projects free cash flows (FCF) for a specified period, then discounts these flows back to their present value using a discount rate, often the weighted average cost of capital (WACC). The sum of these present values represents the estimated intrinsic value of the investment.

The basic DCF formula is:

$$\text{Value} = \sum_{t=1}^{n} \frac{\mathrm{FCF}_t}{(1 + r)^t}$$

where $\mathrm{FCF}_t$ represents the free cash flow in period $t$, $r$ is the discount rate, $t$ is the time period, and the sum runs over the $n$ projected periods. A key best practice is to carefully select the appropriate discount rate, considering the risk profile of the investment and market conditions. Furthermore, thorough sensitivity analysis should be conducted to assess the impact of changes in key assumptions, such as growth rates and discount rates, on the final valuation. For example, a company projecting high growth rates would require a higher discount rate to reflect the increased risk.
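
The formula translates almost directly into code. The sketch below is a minimal version that assumes cash flows arrive at the end of each period and, like the formula above, includes no terminal value. The function name is illustrative.

```python
def dcf_value(free_cash_flows, discount_rate):
    """Sum of FCF_t / (1 + r)^t for t = 1..n, with cash flows at each period end."""
    return sum(
        fcf / (1 + discount_rate) ** t
        for t, fcf in enumerate(free_cash_flows, start=1)
    )


# Hypothetical projection: two years of free cash flow discounted at 10%.
value = dcf_value([110.0, 121.0], discount_rate=0.10)
```

Rerunning `dcf_value` with a slightly higher or lower `discount_rate` is the simplest way to see how sensitive the valuation is to that single assumption.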

Leveraged Buyout (LBO) Model

An LBO model simulates the financial structure and performance of a leveraged buyout transaction. This involves acquiring a company using a significant amount of debt financing. The model projects the target company’s cash flows, debt repayment schedule, and returns to equity investors over the investment horizon. Key inputs include the purchase price, debt financing terms (e.g., interest rates, maturity dates), and projected operating performance. The model typically assesses the feasibility and profitability of the LBO by analyzing key metrics such as return on invested capital (ROIC), internal rate of return (IRR), and net present value (NPV). A critical best practice is to carefully consider the impact of various debt structures and interest rate scenarios on the model’s outputs. For instance, an LBO model might analyze the impact of different levels of leverage on the IRR, showing how a higher debt burden increases the risk but also the potential return.
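
The leverage effect can be shown with a deliberately simplified sketch. It assumes a single debt tranche, a constant annual cash flow used first for interest and then to pay down debt, no dividends before exit, and no taxes or fees. All parameter names are hypothetical, and a real LBO model would be far more detailed.

```python
def lbo_equity_irr(purchase_price, debt_share, interest_rate,
                   annual_cash_flow, exit_value, years=5):
    """Equity IRR for a toy LBO with one equity outflow and one inflow at exit."""
    net_debt = purchase_price * debt_share
    equity_in = purchase_price - net_debt

    for _ in range(years):
        # Interest accrues on outstanding debt only; surplus cash earns nothing.
        interest = max(net_debt, 0.0) * interest_rate
        # Cash left after interest reduces net debt (negative net debt = cash pile).
        net_debt += interest - annual_cash_flow

    equity_out = exit_value - net_debt
    if equity_in <= 0 or equity_out <= 0:
        return None  # IRR is undefined or equity is wiped out
    # With only two equity cash flows, IRR has a closed form.
    return (equity_out / equity_in) ** (1 / years) - 1
```

Calling the function with the same deal at different `debt_share` values, and again with a lower `exit_value`, shows both sides of leverage: returns are amplified when the exit goes well and compressed when it does not.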

Monte Carlo Simulation in Financial Modeling

Monte Carlo simulation is a powerful technique used to assess the impact of uncertainty on financial forecasts. It involves running a model numerous times, each time using randomly generated inputs based on specified probability distributions for key variables (e.g., sales growth, operating margins, discount rates). This generates a distribution of possible outcomes, providing a more comprehensive understanding of the range of potential results than a deterministic model. A key application is in valuing assets or projects under uncertainty. For example, a real estate investment might use Monte Carlo simulation to model the variability in rental income and property values, producing a distribution of potential returns. A best practice is to ensure the probability distributions used accurately reflect the uncertainty associated with the relevant variables.
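
A minimal Monte Carlo sketch using only the Python standard library is shown below. The distributions (normal for growth, triangular for the discount rate), their parameters, and the cash flow figures are assumptions for illustration. The two inputs are sampled independently, which a real model with interdependent variables would need to revisit.

```python
import random
import statistics


def simulate_npv(n_trials=10_000, seed=42):
    """Run the toy NPV model many times with randomly drawn inputs."""
    rng = random.Random(seed)  # fixed seed makes the run reproducible
    outcomes = []
    for _ in range(n_trials):
        growth = rng.gauss(0.05, 0.02)               # mean 5%, std dev 2%
        discount = rng.triangular(0.08, 0.12, 0.10)  # low, high, mode
        cash_flow, value = 300_000.0, -1_000_000.0
        for t in range(1, 6):
            value += cash_flow / (1 + discount) ** t
            cash_flow *= 1 + growth
        outcomes.append(value)
    return outcomes


def summarize(outcomes):
    """Describe the simulated distribution with a few headline statistics."""
    cuts = statistics.quantiles(outcomes, n=20)  # cut points at 5%, 10%, ..., 95%
    return {
        "mean": statistics.fmean(outcomes),
        "p5": cuts[0],
        "p95": cuts[-1],
        "prob_loss": sum(v < 0 for v in outcomes) / len(outcomes),
    }
```

The output is a range and a probability of loss instead of a single number, which is the main advantage over a deterministic model.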

Comparison of Valuation Methodologies

Several valuation methodologies exist, each with its strengths and weaknesses. DCF analysis focuses on intrinsic value based on projected cash flows, while relative valuation compares a company’s valuation multiples (e.g., price-to-earnings ratio) to those of comparable companies. Precedent transactions analysis uses the sale prices of similar companies to estimate a target company’s value. The choice of methodology depends on the specific context, the availability of data, and the goals of the analysis. For example, a DCF model might be more appropriate for valuing a stable, established company with predictable cash flows, while relative valuation might be more suitable for valuing a company in a rapidly evolving industry with limited historical data. A best practice is to use multiple valuation approaches to arrive at a more robust valuation range. Inconsistencies across methods can highlight areas needing further investigation.
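
Combining methods into a range can be sketched as follows. The use of the median peer multiple and the 25% spread threshold for flagging disagreement are assumptions chosen for the example, not established rules.

```python
import statistics


def relative_valuation(target_earnings, peer_pe_ratios):
    """Value implied by applying the median peer P/E multiple to target earnings."""
    return target_earnings * statistics.median(peer_pe_ratios)


def valuation_range(estimates, max_spread=0.25):
    """Combine estimates from several methods and flag wide disagreement.

    Assumes all estimates are positive.
    """
    low, high = min(estimates.values()), max(estimates.values())
    midpoint = (low + high) / 2
    return {
        "low": low,
        "high": high,
        "needs_review": (high - low) / midpoint > max_spread,
    }


# Hypothetical estimates from three methods, in the same currency units.
estimates = {
    "dcf": 100.0,
    "comparables": relative_valuation(10.0, [12, 15, 18]),
    "precedent_transactions": 120.0,
}
result = valuation_range(estimates)
```

A `needs_review` flag does not say which method is wrong. It only signals that the assumptions behind the outlying estimate deserve a closer look.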

Final Wrap-Up

Building accurate and reliable financial models is a cornerstone of effective financial management. By implementing the best practices outlined in this guide, from meticulous data validation to clear communication of results, you can significantly improve the quality and impact of your financial modeling. Remember, a well-structured, well-documented model is not just a tool for analysis; it’s a valuable asset that promotes transparency, facilitates collaboration, and ultimately supports better decision-making across your organization.

FAQ Summary

What are the most common errors in financial models?

Common errors include incorrect formulas, inconsistent data, flawed assumptions, and inadequate documentation. These can lead to inaccurate projections and flawed decision-making.

How often should a financial model be audited?

The frequency of audits depends on the model’s complexity and criticality. Regular reviews, at least annually, are recommended for complex models used for significant decisions.

What software is best for financial modeling?

Microsoft Excel remains a popular choice, offering extensive functionality and wide accessibility. Specialized software packages like Bloomberg Terminal or dedicated financial modeling platforms offer more advanced features but may have a steeper learning curve.

How can I improve the communication of my model’s results?

Use clear and concise language, visually appealing charts and graphs, and avoid technical jargon when communicating with non-technical stakeholders. A well-structured presentation summarizing key findings is crucial.