Financial Modeling Best Practices

Let’s face it, spreadsheets aren’t exactly known for their thrilling narratives. But fear not, intrepid number-cruncher! This isn’t your grandpappy’s accounting class. We’re diving headfirst into the surprisingly exciting world of financial modeling, where accurate data meets elegant design, and where even the most complex formulas can be tamed (mostly). Prepare for a journey into a world where sensitivity analysis holds no terrors even for the faint of heart, and where even the dreaded #REF! error can be vanquished with a well-placed formula and a healthy dose of laughter.

This guide will equip you with the essential skills and best practices to create robust, reliable, and (dare we say) even beautiful financial models. We’ll tackle everything from data validation (because garbage in, garbage out is a universal truth, even in finance) to model design, ensuring your models are not only accurate but also understandable by even the most spreadsheet-phobic executives. Get ready to transform your financial modeling from a tedious chore into a surprisingly satisfying (and potentially lucrative) endeavor.

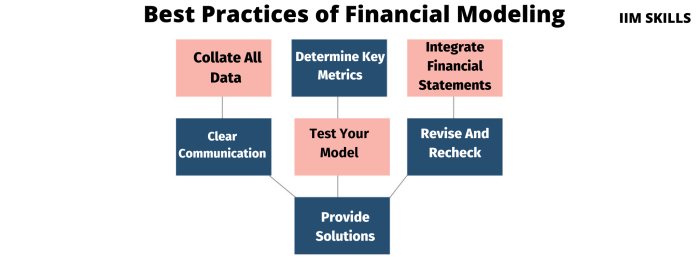

Data Integrity and Validation

Financial modeling is a delicate dance—a precise ballet of numbers where even a misplaced decimal can lead to a financial fiasco. To avoid such a catastrophic performance, ensuring data integrity and validation is paramount. Think of it as the rigorous rehearsal before the big show, making sure every step is flawlessly executed. Without it, your model, no matter how elegantly constructed, is essentially a house built on sand.

Data cleansing and error detection are not mere afterthoughts; they are the bedrock upon which accurate financial projections are built. Failing to address data quality issues is akin to using a rusty, inaccurate compass to navigate a financial ocean – you’re likely to end up stranded, far from your intended destination.

Common Data Errors and Their Impact

Inaccurate or incomplete data can lead to significantly flawed model results. Imagine projecting revenue growth based on outdated market data, or forecasting expenses without accounting for inflation. The consequences can range from minor inaccuracies to disastrous miscalculations, impacting investment decisions, strategic planning, and ultimately, profitability. For example, a simple keying error in a sales figure (e.g., entering $10,000 as $100,000) inflates that figure tenfold, leading to overly optimistic revenue forecasts and potentially misguided investment strategies. Similarly, neglecting to account for seasonal variations in sales can lead to inaccurate predictions, especially if the model is used to inform inventory management or production planning.

Methods for Validating Data Sources and Ensuring Accuracy

Several methods exist to ensure data accuracy. First, always cross-reference data from multiple sources. Don’t rely solely on a single data point; instead, triangulate your information to ensure consistency. Next, perform reasonableness checks. Do the numbers make logical sense in the context of the business and industry? Are the growth rates realistic? If something seems off, investigate further. Employing automated data validation tools can also greatly enhance accuracy. These tools can identify inconsistencies, outliers, and potential errors, significantly reducing the risk of human error. Regular data audits, perhaps on a quarterly basis, help identify and address inconsistencies before they snowball into larger problems.
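To make the reasonableness and cross-referencing checks above more concrete, here is a minimal Python sketch. The function names, the 5× median threshold, the 50% growth bound, and the 1% tolerance are all illustrative assumptions rather than standard values; tune them to your own data.

```python
from statistics import median

def flag_magnitude_outliers(values, factor=5.0):
    """Return indices of values more than `factor` times above or below the median.

    A crude screen for keying errors such as 10,000 entered as 100,000.
    """
    mid = median(values)
    flagged = []
    for i, v in enumerate(values):
        if mid > 0 and (v > mid * factor or v < mid / factor):
            flagged.append(i)
    return flagged

def flag_growth_rates(values, max_abs_growth=0.5):
    """Return (index, growth) pairs where period-over-period growth exceeds the bound."""
    flagged = []
    for i in range(1, len(values)):
        prev = values[i - 1]
        if prev == 0:
            flagged.append((i, None))  # growth undefined: worth a manual look
            continue
        growth = values[i] / prev - 1
        if abs(growth) > max_abs_growth:
            flagged.append((i, growth))
    return flagged

def cross_check(source_a, source_b, tolerance=0.01):
    """Return keys that are missing from one source or differ by more than `tolerance` (relative)."""
    mismatches = []
    for key in sorted(set(source_a) | set(source_b)):
        a, b = source_a.get(key), source_b.get(key)
        if a is None or b is None:
            mismatches.append(key)
        elif abs(a - b) > tolerance * max(abs(a), abs(b)):
            mismatches.append(key)
    return mismatches

# Hypothetical monthly sales with one mistyped entry
monthly_sales = [10_000, 10_500, 100_000, 11_000, 11_200]
print(flag_magnitude_outliers(monthly_sales))
print(flag_growth_rates(monthly_sales))
print(cross_check({"Q1": 100.0, "Q2": 120.0}, {"Q1": 100.5, "Q2": 150.0, "Q3": 90.0}))
```

On the sample series, the $100,000 entry is flagged as an outlier, and both the jump into it and the drop out of it trip the growth check.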

Documenting Data Sources and Transformations

Proper documentation is crucial. Imagine trying to debug a model without knowing where the data came from or how it was manipulated. It’s a recipe for disaster! A comprehensive data dictionary should be created, meticulously documenting each data source, its format, any transformations applied (e.g., currency conversions, inflation adjustments), and the date of the last update. This document serves as a living record of the data’s journey, enabling easy traceability and facilitating audits. Think of it as the model’s detailed backstage pass, revealing the story behind the numbers.
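A data dictionary can be as simple as a structured list. The sketch below assumes a hypothetical `DataSource` record carrying the fields suggested above, plus a helper that flags entries not refreshed within an assumed 90-day window. The sources and dates are invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class DataSource:
    """One entry in a model's data dictionary (illustrative fields)."""
    name: str
    origin: str                      # where the data came from
    data_format: str                 # e.g. "CSV export, USD"
    last_updated: date
    transformations: list[str] = field(default_factory=list)

def stale_sources(dictionary, as_of, max_age_days=90):
    """Return names of sources whose last update is older than max_age_days."""
    return [s.name for s in dictionary if (as_of - s.last_updated).days > max_age_days]

data_dictionary = [
    DataSource("Monthly sales", "ERP export (hypothetical)", "CSV, USD",
               date(2024, 3, 31), ["Converted EUR to USD at month-end rates"]),
    DataSource("Market size", "Industry report (hypothetical)", "PDF table, USD millions",
               date(2023, 6, 30)),
]

print(stale_sources(data_dictionary, as_of=date(2024, 4, 15)))  # prints ['Market size']
```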

Data Validation Checklist

Before unleashing your financial model upon the world, consider this checklist:

- Source Verification: Have all data sources been verified for accuracy and reliability?

- Data Cleansing: Have missing values been addressed, and have outliers been investigated and handled appropriately?

- Consistency Checks: Has the data been checked for internal consistency across different sources and within the model itself?

- Reasonableness Checks: Do the numbers make logical sense within the context of the business and industry?

- Error Detection: Have automated validation tools been used to identify potential errors?

- Documentation: Is there comprehensive documentation of all data sources, transformations, and assumptions?

Model Structure and Design

Building a financial model is like constructing a skyscraper – a haphazard pile of numbers will crumble under scrutiny, while a well-structured model stands tall and proud, weathering even the fiercest market storms. A robust model structure is crucial for accuracy, auditability, and, dare we say it, sanity.

The benefits of a modular design are numerous, offering a level of elegance that would make even a seasoned architect weep with joy. Think of it as assembling Lego bricks rather than wrestling with a giant, unwieldy block of…well, numbers.

Modular Model Design

A modular design breaks down the model into smaller, self-contained units, each focusing on a specific aspect of the financials. This approach promotes clarity, making it easier to understand, debug, and update individual components without disrupting the entire structure. Changes are localized, minimizing the risk of cascading errors – a feature that will undoubtedly keep your hair in place. Imagine trying to fix a faulty wire in a complex circuit versus replacing a single, easily identifiable module. The modular approach offers that same level of simplicity and efficiency.

Comparison of Top-Down and Bottom-Up Approaches

Two primary approaches exist for structuring financial models: top-down and bottom-up. The top-down approach starts with high-level assumptions (such as total market size and market share) and breaks them down into detailed financial statements. This method is efficient for initial estimations but might lack the granularity needed for detailed analysis. The bottom-up approach, conversely, begins with detailed line items and aggregates them upwards. This approach offers greater precision but can be more time-consuming. The ideal approach often involves a hybrid strategy, combining the strengths of both. For example, a high-level revenue projection might be top-down, while detailed cost breakdowns are bottom-up.
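As a rough illustration of the hybrid idea, the sketch below computes a top-down revenue figure (assumed market size × assumed market share) and a bottom-up figure (units × price per product line), then measures the gap between them. All figures and function names are hypothetical.

```python
def top_down_revenue(market_size, market_share):
    """Top-down: start from the total market and apply an assumed share."""
    return market_size * market_share

def bottom_up_revenue(product_lines):
    """Bottom-up: sum units x price across individual product lines."""
    return sum(units * price for units, price in product_lines.values())

market_estimate = top_down_revenue(market_size=50_000_000, market_share=0.02)
detail_estimate = bottom_up_revenue({
    "Widget A": (4_000, 150.0),   # (units, price per unit), hypothetical
    "Widget B": (1_500, 220.0),
})

# Relative gap between the two views; a large gap signals an assumption to revisit
gap = detail_estimate / market_estimate - 1
print(f"Top-down: {market_estimate:,.0f}  Bottom-up: {detail_estimate:,.0f}  Gap: {gap:.1%}")
```

Here the bottom-up figure comes in about 7% below the top-down one. A gap that large is a prompt to revisit either the share assumption or the unit forecasts before choosing which view drives the model.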

Model Documentation: The Unsung Hero

Clear and concise documentation is the unsung hero of any successful financial model. It’s the roadmap that guides you (and anyone else who dares to venture into your model’s depths) through the model’s logic, assumptions, and calculations. Without it, your masterpiece will become an impenetrable fortress, leaving you stranded in a sea of cells and formulas. Think of it as the instruction manual for your financial masterpiece. Proper documentation ensures future modifications are easier, collaboration is smoother, and your sanity remains intact.

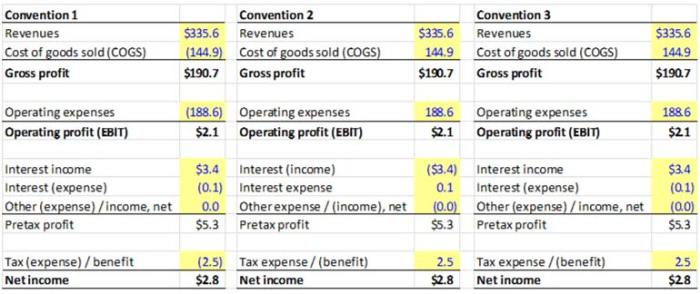

Naming Conventions and Cell Referencing

Naming conventions and cell referencing are the building blocks of a well-organized model. Using descriptive names (e.g., a named range “Revenue_2024” instead of “A12”) makes your model instantly understandable. Similarly, consistent cell referencing practices matter: use absolute references (e.g., $B$1) when a formula copied across rows or columns must keep pointing at the same input, and relative references when it should shift along with the copy. Mixing the two up is a classic source of silently wrong results. A chaotic naming scheme is a recipe for disaster; clear, consistent naming conventions prevent this.
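To see why the choice between relative and absolute references matters, here is a toy Python simulation of how a spreadsheet adjusts A1-style references when a formula is copied: unanchored parts shift with the copy, while parts prefixed with `$` stay fixed. This is a simplified sketch, not Excel’s actual implementation; it ignores sheet names, named ranges, functions whose names contain digits (such as `LOG10`), and copies that would push a reference off the grid.

```python
import re

# Matches A1-style references; a "$" before the column or row anchors that part.
REF_PATTERN = re.compile(r"(\$?)([A-Z]{1,3})(\$?)(\d+)")

def col_to_num(letters):
    """Convert column letters to a 1-based number: A -> 1, Z -> 26, AA -> 27."""
    n = 0
    for ch in letters:
        n = n * 26 + (ord(ch) - ord("A") + 1)
    return n

def num_to_col(n):
    """Convert a 1-based column number back to letters."""
    letters = ""
    while n > 0:
        n, rem = divmod(n - 1, 26)
        letters = chr(ord("A") + rem) + letters
    return letters

def copy_formula(formula, row_offset, col_offset):
    """Shift relative parts of each reference; leave $-anchored parts unchanged."""
    def shift(match):
        col_abs, col, row_abs, row = match.groups()
        if not col_abs:
            col = num_to_col(col_to_num(col) + col_offset)
        if not row_abs:
            row = str(int(row) + row_offset)
        return f"{col_abs}{col}{row_abs}{row}"
    return REF_PATTERN.sub(shift, formula)

print(copy_formula("=B3*$B$1", 1, 0))   # copied one row down
print(copy_formula("=B3*$B$1", 0, 1))   # copied one column right
print(copy_formula("=$A2*B$1", 1, 1))   # mixed references
```

Copying `=B3*$B$1` down one row yields `=B4*$B$1`: the input held in `$B$1` stays put, which is exactly what an absolute reference is for.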

Sample Financial Model: A Modular Masterpiece

Let’s illustrate a modular approach with a simple example. This model is broken down into key modules: Revenue, Costs, and Profitability.

| Module | Description | Inputs | Outputs |
|---|---|---|---|
| Revenue | Projects revenue based on unit sales and pricing. | Unit Sales, Price per Unit | Total Revenue |
| Costs | Calculates total costs, including fixed and variable costs. | Fixed Costs, Variable Cost per Unit, Unit Sales | Total Costs |
| Profitability | Calculates key profitability metrics. | Total Revenue, Total Costs | Profit, Profit Margin |

The interdependencies are clear: Revenue and Costs feed into Profitability. This modular structure allows for easy updates and analysis of individual components. For example, changing the pricing strategy only requires modifying the “Revenue” module, leaving the other modules untouched – a testament to the power of modular design.
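The three modules in the table can be sketched as separate Python functions that pass only their outputs forward. This is a minimal illustration under stated assumptions: the input values are hypothetical, and profit is taken to be total revenue minus total costs, since the simple cost structure in the table has no separate cost-of-sales line.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Inputs:
    unit_sales: float
    price_per_unit: float
    fixed_costs: float
    variable_cost_per_unit: float

def revenue_module(inp):
    """Revenue module: unit sales x price per unit."""
    return inp.unit_sales * inp.price_per_unit

def costs_module(inp):
    """Costs module: fixed costs plus variable cost per unit x unit sales."""
    return inp.fixed_costs + inp.variable_cost_per_unit * inp.unit_sales

def profitability_module(total_revenue, total_costs):
    """Profitability module: profit and profit margin (assumes total costs cover all expenses)."""
    profit = total_revenue - total_costs
    margin = profit / total_revenue if total_revenue else 0.0
    return profit, margin

def run_model(inp):
    """Wire the modules together: Revenue and Costs feed Profitability."""
    return profitability_module(revenue_module(inp), costs_module(inp))

base = Inputs(unit_sales=10_000, price_per_unit=25.0,
              fixed_costs=60_000, variable_cost_per_unit=15.0)
profit, margin = run_model(base)

# A pricing change only alters the Revenue module's input
new_profit, new_margin = run_model(replace(base, price_per_unit=27.0))
print(f"Base: {profit:,.0f} ({margin:.1%})  New price: {new_profit:,.0f} ({new_margin:.1%})")
```

Because only the Revenue module reads the price, the price change touches one input and one function; the Costs module is recalculated with no change to its logic.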

Assumptions and Sensitivity Analysis

Financial models, much like a soufflé, are delicate creations. A single misplaced assumption can cause the whole thing to collapse. Therefore, understanding and managing assumptions, and rigorously testing their impact, is crucial for building robust and reliable models. This section delves into the best practices for documenting, justifying, and analyzing the assumptions underpinning your financial projections. We’ll explore various sensitivity analysis techniques and visualize the results to understand how changes in key inputs affect the model’s output. Prepare for a rollercoaster ride of financial enlightenment (with minimal nausea, we promise).

Documenting and Justifying Model Assumptions

Clearly documenting assumptions is paramount. Imagine trying to debug a model without knowing the initial parameters! Each assumption should be explicitly stated, along with a clear justification based on market research, historical data, expert opinions, or other credible sources. This not only aids in model transparency but also allows for easier auditing and future modifications. For instance, if you’re assuming a 5% annual revenue growth rate, provide supporting evidence from industry reports or company forecasts. A simple, well-formatted table detailing each assumption, its source, and its rationale is an excellent approach.
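An assumption register like the table described above can also be kept in code, so that the model reads its inputs from one documented place. The `Assumption` record and the sample entries below are hypothetical; the helper simply lists any assumption that is missing a source or a rationale.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Assumption:
    name: str
    value: float
    source: str      # e.g. industry report, historical data, management forecast
    rationale: str   # why this value is reasonable

def undocumented(assumptions):
    """Return names of assumptions missing a source or a rationale."""
    return [a.name for a in assumptions
            if not a.source.strip() or not a.rationale.strip()]

register = [
    Assumption("revenue_growth", 0.05, "Industry report (hypothetical)",
               "Consistent with the sector's recent growth (hypothetical)"),
    Assumption("discount_rate", 0.10, "Internal cost-of-capital estimate (hypothetical)", ""),
]

# The model pulls its inputs from the register rather than from scattered cells
inputs = {a.name: a.value for a in register}
print(undocumented(register))  # prints ['discount_rate']
```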

Performing Sensitivity Analysis to Assess Model Robustness

Sensitivity analysis is the art of poking and prodding your model to see how it reacts. By systematically changing key input variables, you can assess the model’s robustness and identify the assumptions that have the most significant impact on the results. This helps you understand the range of possible outcomes and highlights areas where further investigation or refinement might be needed. If the output barely moves when an input changes substantially, that input matters little to your conclusions; if the output swings wildly, the assumptions driving those swings deserve the closest scrutiny and the strongest supporting evidence.
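A one-way sensitivity run can be sketched in a few lines of Python. The example below varies the discount rate on a hypothetical project (an outlay of 1,000 followed by five annual inflows of 300) while holding the cash flows fixed. Note that this `npv` helper treats the first cash flow as occurring today (t = 0); Excel’s `NPV` function, by contrast, discounts its first value by one full period, so the two are not interchangeable without adjustment.

```python
def npv(rate, cash_flows):
    """NPV with cash_flows[0] at t=0 (undiscounted) and cash_flows[t] discounted t periods."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))

def one_way(base_inputs, name, values, model):
    """Vary one input over `values`, holding all others at base; return (value, output) pairs."""
    results = []
    for v in values:
        inputs = dict(base_inputs)
        inputs[name] = v
        results.append((v, model(**inputs)))
    return results

BASE = {"rate": 0.10, "cash_flows": [-1000, 300, 300, 300, 300, 300]}  # hypothetical project

for rate, value in one_way(BASE, "rate", [0.08, 0.10, 0.12], npv):
    print(f"discount rate {rate:.0%}: NPV {value:,.2f}")
```

At 10% the NPV is about 137; it rises at 8% and falls at 12%, and the size of those moves is what a one-way analysis reports.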

Examples of Sensitivity Analysis Techniques

Several techniques exist for performing sensitivity analysis, each offering a different perspective.

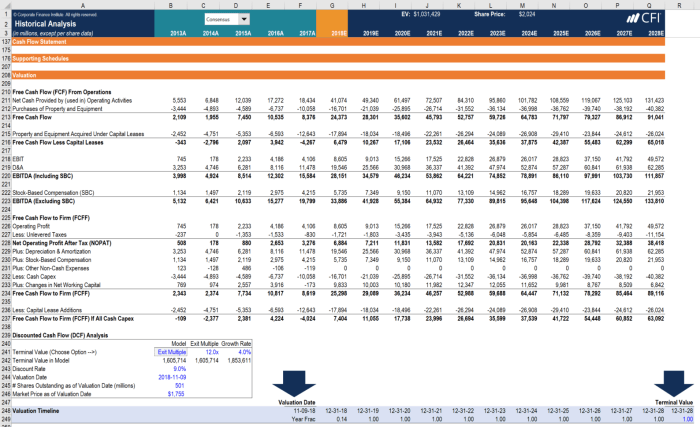

- One-way sensitivity analysis: This involves changing one input variable at a time while holding all others constant. This is a straightforward approach that provides a clear understanding of the individual impact of each variable. Imagine changing the discount rate in a DCF model – this technique allows us to see how sensitive the net present value is to variations in this specific rate.

- Two-way sensitivity analysis: This method simultaneously varies two input variables, revealing the interaction between them. For example, you might examine the impact of varying both revenue growth and operating margins on profitability. This helps identify scenarios where the combined effect of two variables might lead to unexpected outcomes.

- Monte Carlo Simulation: This sophisticated technique uses random sampling to generate a range of possible outcomes, reflecting the uncertainty associated with each input variable. Each uncertain input is described by a probability distribution (for example, revenue growth normally distributed around 5%) rather than a single value, and the model is recalculated thousands of times with inputs drawn from those distributions. The result is a probability distribution of the model’s output, illustrating the likelihood of different scenarios and giving a more holistic view of the uncertainty inherent in forecasting.
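The two-way and Monte Carlo techniques can be illustrated on a deliberately tiny, hypothetical model: next year’s operating profit equals current revenue × (1 + growth) × operating margin. The two-way table is the same idea as a two-variable data table in a spreadsheet. For the Monte Carlo run, the input distributions (normal growth around 5%, uniform margin between 15% and 25%) and the 180,000 threshold are assumptions chosen for illustration, and the inputs are sampled independently; a real model may need to account for correlation between them.

```python
import random
from statistics import mean

BASE_REVENUE = 1_000_000  # hypothetical current-year revenue

def next_year_operating_profit(growth, margin):
    """Toy model: next year's revenue times an operating margin."""
    return BASE_REVENUE * (1 + growth) * margin

def two_way_table(growth_values, margin_values):
    """Rows = growth values, columns = margin values, cells = model output."""
    return [[next_year_operating_profit(g, m) for m in margin_values]
            for g in growth_values]

def monte_carlo(n_trials, seed=42):
    """Sample inputs from assumed distributions and return the simulated outputs."""
    rng = random.Random(seed)  # seeded so results are reproducible
    outcomes = []
    for _ in range(n_trials):
        growth = rng.gauss(0.05, 0.02)    # assumed: normal, mean 5%, sd 2%
        margin = rng.uniform(0.15, 0.25)  # assumed: uniform between 15% and 25%
        outcomes.append(next_year_operating_profit(growth, margin))
    return outcomes

growths, margins = [0.0, 0.05, 0.10], [0.10, 0.20, 0.30]
table = two_way_table(growths, margins)
for g, row in zip(growths, table):
    print(f"growth {g:.0%}: " + "  ".join(f"{v:>9,.0f}" for v in row))

sims = monte_carlo(10_000)
prob_below = sum(x < 180_000 for x in sims) / len(sims)
print(f"Mean outcome: {mean(sims):,.0f}; P(profit < 180,000) = {prob_below:.1%}")
```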

Visual Representation of Sensitivity Analysis Results

A picture is worth a thousand numbers (especially in finance). Visualizing the results of your sensitivity analysis using charts and graphs makes it easier to understand and communicate the model’s behavior. For example, a tornado chart can visually rank the variables according to their impact on the output. Scatter plots can illustrate the relationship between two input variables and the resulting output. A cumulative probability distribution graph, from a Monte Carlo simulation, can illustrate the likelihood of exceeding or falling below certain thresholds. These visualizations provide a clear, concise summary of the model’s sensitivity and its potential range of outcomes. For instance, a tornado chart might show that changes in discount rate have a much larger impact on NPV than changes in revenue growth, indicating where to focus further refinement.
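The ranking behind a tornado chart is simple to compute: set each input to its low and high value in turn, hold the rest at base, and sort by the width of the resulting output range. The toy five-year DCF and the low/high ranges below are hypothetical assumptions; the charting step is left out.

```python
def model(discount_rate, revenue_growth, margin):
    """Toy 5-year DCF: PV of operating profit on a growing revenue base, less an upfront investment."""
    investment = 500_000  # hypothetical
    revenue = 1_000_000   # hypothetical starting revenue
    value = -investment
    for year in range(1, 6):
        revenue *= 1 + revenue_growth
        value += revenue * margin / (1 + discount_rate) ** year
    return value

BASE = {"discount_rate": 0.10, "revenue_growth": 0.05, "margin": 0.20}
RANGES = {  # hypothetical low/high values for each input
    "discount_rate": (0.08, 0.12),
    "revenue_growth": (0.03, 0.07),
    "margin": (0.18, 0.22),
}

def tornado(model, base, ranges):
    """Return (name, low_output, high_output, swing) rows, largest swing first."""
    rows = []
    for name, (low, high) in ranges.items():
        out_low = model(**{**base, name: low})
        out_high = model(**{**base, name: high})
        rows.append((name, out_low, out_high, abs(out_high - out_low)))
    return sorted(rows, key=lambda r: r[3], reverse=True)

for name, lo, hi, swing in tornado(model, BASE, RANGES):
    print(f"{name:>15}: {lo:>10,.0f} to {hi:>10,.0f} (swing {swing:,.0f})")
```

With these made-up ranges the operating margin dominates. The ordering depends heavily on how wide each range is set, so the ranges themselves deserve documentation.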

Summary Table of Key Assumptions and Sensitivity Analysis

| Assumption | Potential Impact | Sensitivity Analysis Performed | Results Summary |
|---|---|---|---|
| Discount Rate (10%) | Significant impact on NPV | One-way and Monte Carlo simulation | NPV drops by 20% if rate increases to 12% |
| Revenue Growth (5%) | Moderate impact on profitability | One-way and Two-way (with operating margin) | Profitability is more sensitive to revenue growth when operating margins are low |
| Operating Margin (20%) | Moderate impact on profitability | One-way and Two-way (with revenue growth) | Profitability less sensitive to margin changes compared to revenue growth |
| Market Share (15%) | Minor impact on overall revenue | One-way analysis | Minimal impact on overall model output |

Formula Auditing and Error Checking

Financial modeling isn’t just about crunching numbers; it’s about building a robust, reliable, and (let’s be honest) somewhat elegant structure. A single misplaced parenthesis can send your projections into the stratosphere, leaving you looking like you predicted the next Bitcoin bubble (when all you actually did was misplace a parenthesis). Therefore, a rigorous auditing process is crucial – think of it as a financial model’s annual health check-up.

Formula auditing and error checking are the unsung heroes of accurate financial modeling. They’re the diligent detectives that sniff out those sneaky errors before they wreak havoc on your carefully constructed masterpiece. This section will explore practical methods to ensure your model’s formulas are as sound as a Swiss bank.

Methods for Auditing Formulas and Identifying Potential Errors

Effective formula auditing involves a multi-pronged approach. First, visually inspect your formulas for obvious errors like typos or incorrect cell references. Think of it as proofreading your model – a second pair of eyes (or even a third!) is invaluable. Next, use the trace precedents and trace dependents features available in spreadsheet software such as Excel. These tools visually map the flow of data within your model, highlighting dependencies and revealing potential issues. For instance, a formula that references a blank cell is often a sign of a broken link or a missing input. Finally, leverage data validation rules to ensure data input adheres to predefined criteria. This helps prevent errors from creeping into your model at the source. Imagine this as a bouncer at the club, only letting in legitimate data.
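Trace Precedents and Trace Dependents work on the dependency graph between cells. The sketch below models a sheet as a hypothetical Python dictionary of cell contents and recovers precedents, dependents, and formulas pointing at blank cells. The reference parsing is deliberately simplified (plain A1 references only, with no `$` signs, ranges, or sheet names), so treat it as an illustration of the idea rather than a substitute for the built-in tools.

```python
import re

REF = re.compile(r"\b[A-Z]{1,3}\d+\b")  # simplified: plain A1 references only

def references(formula):
    """Cell references in a formula; constants (no leading '=') reference nothing."""
    return set(REF.findall(formula)) if formula.startswith("=") else set()

def precedents(sheet, cell):
    """Cells that `cell` reads from directly."""
    return references(sheet.get(cell, ""))

def dependents(sheet, cell):
    """Cells whose formulas read `cell` directly."""
    return {c for c, f in sheet.items() if cell in references(f)}

def blank_references(sheet):
    """(formula_cell, referenced_cell) pairs where the referenced cell is empty or missing."""
    return sorted((c, r) for c in sheet for r in references(sheet[c])
                  if sheet.get(r, "") == "")

# Hypothetical sheet: B4 multiplies by B5, which was never filled in
sheet = {"B1": "1000", "B2": "0.05", "B3": "=B1*(1+B2)", "B4": "=B3*B5"}
print(sorted(precedents(sheet, "B3")))  # prints ['B1', 'B2']
print(dependents(sheet, "B3"))          # prints {'B4'}
print(blank_references(sheet))          # prints [('B4', 'B5')]
```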

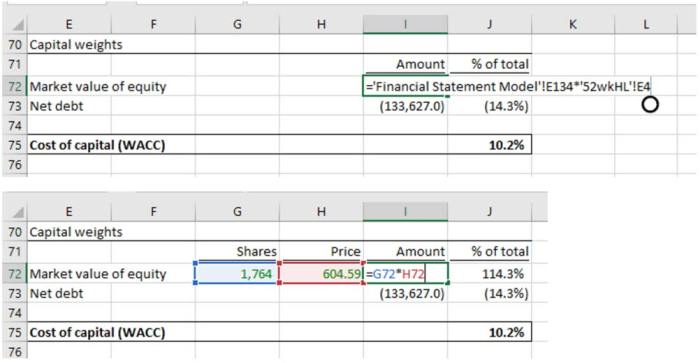

Utilizing Formula Auditing Tools in Spreadsheet Software

Spreadsheet software packages come with formula auditing tools, and Excel’s are particularly extensive. These tools allow you to trace precedents and dependents, identify circular references (where a formula directly or indirectly refers to itself – a recipe for disaster!), and even evaluate formulas step-by-step. For example, Excel’s “Formula Auditing” group within the “Formulas” tab offers a range of tools, including “Trace Precedents,” “Trace Dependents,” and “Error Checking.” These tools are your secret weapons in the fight against formula errors. Learning to use them effectively is a must for any serious modeler.

Common Formula Errors and Their Solutions

Let’s face it, errors happen. But knowing the common culprits can help you avoid them. One frequent offender is the dreaded “#REF!” error, which indicates an invalid cell reference. It typically appears when a cell, row, or column that a formula refers to has been deleted. Another common mistake is using incorrect operators. Confusing “+” and “-” can lead to significantly inaccurate results. For example,

`=A1-B1`

instead of

`=A1+B1`

will obviously lead to the wrong result. Circular references, as mentioned earlier, are another major headache. These occur when a formula directly or indirectly refers to its own cell. The solution? Carefully review your formula structure, and if necessary, restructure your model to break the circular dependency.
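Finding a circular reference amounts to finding a cycle in the cell dependency graph. The sketch below uses a depth-first search and returns one loop it finds. The sheet, which mimics the classic interest-on-average-debt circularity, and the simplified A1 parsing are illustrative assumptions.

```python
import re

REF = re.compile(r"\b[A-Z]{1,3}\d+\b")  # simplified: plain A1 references only

def build_graph(sheet):
    """Map each cell to the cells its formula references."""
    return {cell: set(REF.findall(f)) if f.startswith("=") else set()
            for cell, f in sheet.items()}

def find_cycle(graph):
    """Return one circular chain of cells (first cell repeated at the end), or None."""
    WHITE, GREY, BLACK = 0, 1, 2          # unvisited, on current path, finished
    color = {node: WHITE for node in graph}
    path = []

    def visit(node):
        color[node] = GREY
        path.append(node)
        for nxt in sorted(graph.get(node, ())):
            state = color.get(nxt, BLACK)  # cells without formulas can't be in a loop
            if state == GREY:              # back edge: nxt is already on the path
                return path[path.index(nxt):] + [nxt]
            if state == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in graph:
        if color[node] == WHITE:
            found = visit(node)
            if found:
                return found
    return None

# Hypothetical debt schedule: interest -> average debt -> closing debt -> cash -> interest
sheet = {"B1": "=B2*0.05", "B2": "=B3/2+500", "B3": "=1000-B4", "B4": "=200-B1"}
print(find_cycle(build_graph(sheet)))  # prints ['B1', 'B2', 'B3', 'B4', 'B1']
```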

Peer Review Process for Financial Models

Think of a peer review as a quality control checkpoint for your model. Before unleashing your masterpiece on the world (or your boss), have a colleague review your work. This fresh perspective can often catch errors you’ve missed. Establish a clear checklist for reviewers to follow, focusing on formula accuracy, data integrity, and overall model logic. This process not only helps identify errors but also fosters a culture of collaboration and continuous improvement.

Best Practices for Preventing Common Formula Errors

Preventing errors is always better than fixing them. Here are some best practices:

- Use absolute and relative cell referencing consistently and correctly.

- Employ clear and concise formula structures.

- Thoroughly document your formulas and assumptions.

- Regularly back up your model.

- Test your model with various data inputs.

- Use named ranges to improve readability and reduce errors.

Following these practices will significantly reduce the likelihood of errors and make your models more robust and reliable. Think of it as building a house – a solid foundation and careful construction are key to avoiding costly repairs later.
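The “test your model with various data inputs” practice can be made repeatable by writing down checks that must hold whatever the inputs are. The sketch below assumes a hypothetical single-product profit formula and tests three properties: zero volume loses exactly the fixed costs, the break-even volume (fixed costs ÷ contribution per unit) yields zero profit, and profit never falls as volume rises while price exceeds variable cost.

```python
def profit(unit_sales, price, fixed_costs, variable_cost):
    """Hypothetical single-product profit: contribution margin x volume, less fixed costs."""
    return unit_sales * (price - variable_cost) - fixed_costs

def run_checks():
    # Zero volume should lose exactly the fixed costs
    assert profit(0, 25.0, 60_000, 15.0) == -60_000
    # Break-even volume: 60,000 / (25 - 15) = 6,000 units gives zero profit
    assert profit(6_000, 25.0, 60_000, 15.0) == 0
    # With a positive contribution margin, more units should never reduce profit
    outputs = [profit(u, 25.0, 60_000, 15.0) for u in range(0, 20_001, 1_000)]
    assert all(a <= b for a, b in zip(outputs, outputs[1:]))
    return True

print(run_checks())  # prints True
```

Rerunning checks like these after every change turns “test with various inputs” from a one-off exercise into a safety net.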

Version Control and Collaboration

Financial modeling, at its core, is a collaborative endeavor, often involving multiple analysts, reviewers, and stakeholders. Imagine a single spreadsheet, constantly evolving, with everyone adding their two cents (and potentially their two thousand errors!). Chaos, my friends, pure, unadulterated chaos. This is where version control steps in, saving the day (and your sanity). Proper version control ensures that everyone is working with the most up-to-date information, prevents accidental overwrites, and allows for easy tracking of changes – a crucial element in maintaining model integrity and accountability.

Version control in financial modeling is not merely a good idea; it’s a necessity. It’s the difference between a smoothly running financial engine and a fiery wreck of spreadsheet catastrophe. Without it, you risk losing hours, days, or even weeks of work to a simple mishap. Furthermore, in the face of regulatory scrutiny, a well-maintained version history, paired with a change log, provides a documented record of your model’s evolution and the rationale behind its key assumptions.

Version Control Methods and Applicability

Several methods exist for managing model versions. The simplest involves manually saving different versions with descriptive names (e.g., “Model_v1_Initial”, “Model_v2_RevisedAssumptions”). While seemingly straightforward, this approach quickly becomes unwieldy for complex models with frequent updates. More sophisticated methods use a version control system (VCS), such as Git (commonly used in software development but adaptable to financial models), or dedicated spreadsheet versioning add-ins. These systems record each saved or committed change along with its author and timestamp, allowing easy reversion to previous versions. One caveat: Git treats a workbook file such as .xlsx as binary, so without additional tooling it cannot show meaningful cell-level differences or merge two people’s edits to the same file. The choice of method depends on the model’s complexity, the number of collaborators, and the level of control required. For smaller, less complex models, manual versioning might suffice. However, for larger models involving multiple team members, a VCS is strongly recommended to avoid the potential for disastrous conflicts.

Best Practices for Collaborating on Financial Models

Effective collaboration hinges on clear communication, defined roles, and a structured workflow. Establishing a central repository for the model (e.g., a shared network drive or cloud-based storage) is paramount. Clear naming conventions for files and folders help maintain order. Regular check-ins, where team members review and discuss progress, prevent misunderstandings and ensure everyone is on the same page. Consider using collaborative editing tools that allow multiple users to work simultaneously, but be mindful of potential conflicts and the need for careful merging of changes. A well-defined process for reviewing and approving changes, including sign-off procedures, is crucial for ensuring accuracy and compliance.

Managing Different Model Versions and Tracking Changes

A robust version control process involves a systematic approach to managing model versions and documenting changes. Each version should be clearly labeled with a version number and a brief description of the changes made. A change log, either within the model itself or as a separate document, should meticulously record all modifications, including the date, author, and a detailed explanation of the changes. This comprehensive record provides a transparent audit trail, enabling easy tracking of model evolution and assisting in troubleshooting or regulatory reviews. Consider using comments within the model itself to annotate specific cells or formulas, providing context and explanations.
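If each saved version of a model can be exported as a snapshot of cell contents (for example, by reading the workbook with a library such as openpyxl, which is not shown here), the differences between versions can be listed automatically and turned into change-log lines. The snapshots, author, and reason below are hypothetical, and the explanation of why a change was made still has to come from a person.

```python
from datetime import date

def diff_versions(old, new):
    """Compare two {cell: content} snapshots; return (cell, old, new) for every change."""
    changes = []
    for cell in sorted(set(old) | set(new)):
        before, after = old.get(cell), new.get(cell)
        if before != after:
            changes.append((cell, before, after))
    return changes

def change_log_entries(changes, author, when, reason):
    """Turn raw differences into change-log lines with date, author and explanation."""
    return [f"{when.isoformat()} | {author} | {cell}: {before!r} -> {after!r} | {reason}"
            for cell, before, after in changes]

# Hypothetical snapshots of two model versions
v1 = {"Assumptions!B2": "0.05", "Assumptions!B3": "0.10"}
v2 = {"Assumptions!B2": "0.04", "Assumptions!B3": "0.10", "Assumptions!B4": "0.15"}

entries = change_log_entries(diff_versions(v1, v2), author="J. Analyst (hypothetical)",
                             when=date(2024, 5, 1), reason="Revised growth assumptions")
for line in entries:
    print(line)
```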

Checklist for Effective Version Control and Collaboration

Before embarking on any significant modeling project, ensure the following:

- Establish a version control system: Choose a method appropriate for the model’s complexity and team size.

- Define clear naming conventions: Use consistent and descriptive names for files and folders.

- Create a central repository: Store the model in a secure and accessible location.

- Implement a change log: Document all modifications with date, author, and a detailed description.

- Establish a review and approval process: Ensure changes are reviewed and signed off before implementation.

- Regularly back up the model: Multiple backups in different locations prevent data loss.

- Communicate effectively: Maintain clear and consistent communication among team members.

- Utilize collaborative editing tools (with caution): Leverage tools that allow for simultaneous editing but establish conflict resolution protocols.

Final Summary: Financial Modeling Best Practices

So, there you have it – a whirlwind tour through the often-overlooked but undeniably crucial world of financial modeling best practices. We’ve journeyed from the meticulous cleaning of data (think of it as a financial spa day for your numbers) to the elegant presentation of results (because even numbers deserve a little flair). Remember, a well-constructed financial model isn’t just about crunching numbers; it’s about communicating insights, making informed decisions, and maybe, just maybe, avoiding a few embarrassing (and potentially costly) errors along the way. Now go forth and model with confidence (and a touch of humor)!

Popular Questions

What’s the best software for financial modeling?

While many options exist, Microsoft Excel remains the industry standard due to its widespread use and extensive functionality. However, a programming language such as Python, with data libraries like pandas, might be preferable for large-scale modeling or complex analyses.

How do I handle circular references in my model?

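Circular references, where a formula directly or indirectly refers back to its own cell, are a common source of errors. Excel will usually warn you about them. Carefully review your formulas to identify the loop and restructure your calculations to break the cycle. For deliberate circularity (for example, interest expense that depends on average debt, which in turn depends on the cash left after paying interest), enabling Excel’s iterative calculation can resolve the loop, but use it with caution: once it is switched on, accidental circular references can go unnoticed.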
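For a deliberate circularity, iterative calculation simply means recalculating until the values stop changing. The sketch below shows that fixed-point loop for a hypothetical debt schedule in which interest is charged on average debt and surplus cash (after interest) repays debt. The tolerance and iteration cap play roughly the role of a spreadsheet’s maximum-change and maximum-iterations settings; the numbers are illustrative.

```python
def solve_interest(opening_debt, cash_before_interest, rate, tol=1e-9, max_iter=100):
    """Fixed-point iteration for the interest / average-debt circularity.

    Interest depends on average debt, closing debt depends on the cash left
    after paying interest, and that cash depends on interest.
    """
    interest = 0.0  # initial guess
    for _ in range(max_iter):
        closing_debt = opening_debt - (cash_before_interest - interest)  # surplus cash repays debt
        new_interest = rate * (opening_debt + closing_debt) / 2          # interest on average debt
        if abs(new_interest - interest) < tol:
            return new_interest, opening_debt - (cash_before_interest - new_interest)
        interest = new_interest
    raise RuntimeError("did not converge; check the model for a genuine logic loop")

interest, closing_debt = solve_interest(opening_debt=1000, cash_before_interest=200, rate=0.05)
print(f"Interest: {interest:.4f}  Closing debt: {closing_debt:.4f}")
```

Here the loop can also be broken algebraically: solving I = r × (2D₀ − C + I) / 2 for I gives I = r(2D₀ − C) / (2 − r), about 46.15 for these inputs, which is one way to restructure the calculation instead of relying on iteration.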

What’s the difference between one-way and two-way sensitivity analysis?

One-way sensitivity analysis changes only one input variable at a time to observe its effect on the output. Two-way analysis simultaneously varies two inputs, providing a more comprehensive view of potential interactions and risks.